Generative AI refers to artificial intelligence techniques that create new content, such as images, text, music, or even video, based on patterns learned from training data. The concept dates back to the chatbots of the 1960s, but the introduction of generative adversarial networks (GANs) in 2014 brought new levels of realism to AI-generated content. Today, generative AI is reshaping industries and sparking debate about the boundaries of machine creativity.

Generative AI vs. Traditional AI

Generative AI and traditional AI represent two different approaches to artificial intelligence that have distinct characteristics and use cases. Let’s delve into the differences between these two paradigms:

Generative AI

Generative AI refers to a subset of artificial intelligence focused on generating new content, such as text, images, audio, or video. It typically relies on deep learning models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Recurrent Neural Networks (RNNs), and transformers, that learn to produce data resembling their training dataset. A GAN, for example, consists of two neural networks, a generator and a discriminator, that compete in a feedback loop which gradually improves the quality of the generated content. Generative AI has applications in creative fields such as art, music, and writing, and in data augmentation for training other machine learning models.

| Pros | Cons |
|---|---|
| Produces novel and creative content | May produce unrealistic or nonsensical outputs |
| Useful for image synthesis, text generation, data augmentation | Requires significant computational resources |
| Applications in creative industries, content creation, simulation | Limited interpretability of generated outputs |
| Can generate diverse outputs | Difficult to control and fine-tune output content |
| Can assist in exploring new design possibilities | Training and optimizing generative models can be complex |

Traditional AI

Traditional AI, also known as rule-based or symbolic AI, involves programming explicit rules and logic into a system to enable it to perform specific tasks. These rules are often designed by human experts and follow a pre-defined set of instructions. Traditional AI systems excel at tasks that can be described using well-defined rules and logic, such as chess-playing programs or expert systems used in medical diagnosis.

| Pros | Cons |
|---|---|
| Transparent and interpretable reasoning | Limited flexibility with complex or unstructured data |
| Effective for tasks with clear rules and logic | Difficulty handling nuanced and subjective decision-making |
| Can be less computationally demanding | Manual rule engineering can be time-consuming |
| Well-suited for tasks with explicit and well-defined problem-solving steps | Limited adaptation to new or changing situations |
| Easy to understand and modify rules | May struggle with real-world variability and uncertainties |
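To make the rule-based approach concrete, here is a minimal forward-chaining inference engine, the kind of mechanism many classic expert systems use: it keeps firing if-then rules until no new facts appear. The animal-identification rules and the `forward_chain` helper are hypothetical, written for illustration rather than taken from any real expert system.

```python
# Hypothetical knowledge base: each rule is (set of required facts, concluded fact).
RULES = [
    ({"has feathers"}, "is bird"),
    ({"is bird", "cannot fly", "swims"}, "is penguin"),
    ({"has fur"}, "is mammal"),
    ({"is mammal", "eats meat"}, "is carnivore"),
]


def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    trace = []  # (conditions, conclusion) for every rule that fired, in order
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append((sorted(conditions), conclusion))
                changed = True
    return known, trace


known, trace = forward_chain({"has feathers", "cannot fly", "swims"}, RULES)
for conditions, conclusion in trace:
    print(f"{' and '.join(conditions)} -> {conclusion}")
```

Because `trace` records which rule produced each conclusion, the system can explain its reasoning step by step, which is the transparency listed in the table above. The limitation is just as visible: a fact that no rule mentions has no effect at all.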

How does generative AI work?

Generative AI is a subset of artificial intelligence that focuses on creating new content that is similar to existing data. It involves using machine learning models to generate data that shares characteristics with a given training dataset. One of the most popular techniques used in generative AI is the Generative Adversarial Network (GAN), which consists of two neural networks, the generator and the discriminator, that work in tandem to produce realistic output.

Here’s how the basic process of generative AI, specifically using GANs, works:

1. Generator Network

The generator is a neural network that takes random noise as input and produces data samples (e.g., images, text, audio) as output. Initially, the generated data is random and lacks coherence.

2. Discriminator Network

The discriminator is another neural network trained to distinguish between real data samples from the training dataset and the fake data generated by the generator. It takes in both real and generated samples and assigns probabilities indicating whether each sample is real or fake.
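A discriminator is, in effect, a binary classifier trained with binary cross-entropy. The minimal sketch below assumes 1-D samples and a logistic discriminator with just two parameters (`w`, `c`); real GANs use deep networks, and the functions and sample values here are hypothetical.

```python
import math


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def discriminator(x, w, c):
    # Hypothetical 1-D logistic discriminator: estimated probability that x is real.
    return sigmoid(w * x + c)


def discriminator_loss(real_samples, fake_samples, w, c, eps=1e-12):
    # Binary cross-entropy with label 1 for real samples and label 0 for generated ones.
    def clamp(p):
        return min(max(p, eps), 1.0 - eps)  # avoid log(0)
    loss_real = -sum(math.log(clamp(discriminator(x, w, c))) for x in real_samples) / len(real_samples)
    loss_fake = -sum(math.log(1.0 - clamp(discriminator(x, w, c))) for x in fake_samples) / len(fake_samples)
    return loss_real + loss_fake


real = [3.8, 4.1, 4.3]   # hypothetical samples from the training data
fake = [0.2, -0.5, 0.9]  # hypothetical early generator outputs
print(round(discriminator(4.0, w=1.0, c=-2.0), 2))    # 0.88
print(discriminator_loss(real, fake, w=0.0, c=0.0))   # untrained: 2*ln(2), about 1.386
print(discriminator_loss(real, fake, w=1.0, c=-2.0))  # separates the two groups, so the loss is lower
```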

3. Training Process

The training of a GAN involves an iterative process where the generator and discriminator networks compete against each other and improve over time:

- Initially, the generator produces low-quality output that the discriminator can easily distinguish from real data.

- The discriminator is trained on real data samples and the fake data generated by the generator. It learns to improve its ability to differentiate between real and fake samples.

- The generator’s goal is to produce data that is indistinguishable from real data. It learns by receiving feedback from the discriminator on its generated outputs.

- As training progresses, the generator becomes better at generating data that fools the discriminator, and the discriminator becomes better at discerning real from fake data.
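The training loop can be sketched end to end on a deliberately tiny problem. The assumptions are heavily simplifying: the real data is a 1-D Gaussian, the generator is the affine map `G(z) = a*z + b`, the discriminator is a logistic model over the features `x` and `x**2` (so it can notice differences in both mean and spread), gradients are derived by hand, and each averaged gradient is clipped to keep the toy stable. The generator uses the common non-saturating loss, `-log D(G(z))`. All names and hyperparameters are illustrative.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def clip(g, limit=1.0):
    # Clip each averaged gradient so one bad step cannot blow up the parameters.
    return max(-limit, min(limit, g))


def train_toy_gan(steps=2000, batch=64, lr=0.01, real_mean=2.0, real_std=0.5, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0            # generator: G(z) = a * z + b
    w1, w2, c = 0.0, 0.0, 0.0  # discriminator logit: s(x) = w1 * x + w2 * x**2 + c

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        g_w1 = g_w2 = g_c = 0.0
        for xr, z in zip(real, noise):
            xf = a * z + b
            dr = sigmoid(w1 * xr + w2 * xr * xr + c)
            df = sigmoid(w1 * xf + w2 * xf * xf + c)
            g_w1 += (dr - 1.0) * xr + df * xf
            g_w2 += (dr - 1.0) * xr * xr + df * xf * xf
            g_c += (dr - 1.0) + df
        w1 -= lr * clip(g_w1 / batch)
        w2 -= lr * clip(g_w2 / batch)
        c -= lr * clip(g_c / batch)

        # Generator step (non-saturating loss): minimise -log D(G(z)),
        # using feedback from the freshly updated discriminator.
        g_a = g_b = 0.0
        for z in noise:
            xf = a * z + b
            df = sigmoid(w1 * xf + w2 * xf * xf + c)
            ds_dx = w1 + 2.0 * w2 * xf  # how the discriminator's logit changes with the sample
            g_a += (df - 1.0) * ds_dx * z
            g_b += (df - 1.0) * ds_dx
        a -= lr * clip(g_a / batch)
        b -= lr * clip(g_b / batch)

    return a, b


def sample(a, b, n, rng):
    # After training, the generator alone turns fresh noise into new samples.
    return [a * rng.gauss(0.0, 1.0) + b for _ in range(n)]


a, b = train_toy_gan()
print(f"generator: mean ~ {b:.2f}, spread ~ {abs(a):.2f} (real data: 2.00, 0.50)")
print([round(x, 2) for x in sample(a, b, 5, random.Random(1))])
```

The aim is for `b` to approach the real mean and `abs(a)` the real standard deviation (the sign of `a` does not matter because the noise is symmetric). Even this toy GAN can oscillate around the target instead of settling, so treat the printed numbers as illustrative rather than guaranteed.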

4. Equilibrium (Convergence)

Ideally, the GAN training process reaches an equilibrium where the generator produces high-quality data that is nearly indistinguishable from real data. At this point, the discriminator struggles to differentiate between real and fake samples.
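The equilibrium can be stated precisely with the minimax objective from the original GAN formulation (Goodfellow et al., 2014), where p_data is the data distribution, p_z the noise distribution, and p_g the distribution of the generator's outputs. For a fixed generator the best discriminator has a closed form, and at the global optimum it outputs 1/2 everywhere:

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

D^*_G(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}

p_g = p_{\text{data}} \;\Rightarrow\; D^*_G(x) = \tfrac{1}{2}, \qquad V(D^*_G, G) = -\log 4
```

This is the formal meaning of the discriminator "struggling to differentiate". In practice, training rarely reaches this point exactly; oscillation and mode collapse (the generator producing only a narrow range of outputs) are common failure modes.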

5. Generated Output

Once the GAN has been trained, the generator can be used independently to create new, realistic data samples that share characteristics with the training dataset. For instance, in image generation, the generator can produce images that resemble the images in the training dataset.

Generative AI can also involve other techniques beyond GANs, such as Variational Autoencoders (VAEs) and Recurrent Neural Networks (RNNs), depending on the type of data being generated.

The rise of deep generative models

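Generative AI relies on deep learning models that learn to create new data resembling a given dataset: they encode the training data into a compact representation and use it to generate outputs that are similar to, but distinct from, the originals. Variational autoencoders (VAEs), introduced in 2013, were early pioneers: by encoding data as a probability distribution and sampling variations from it, they made generative modeling scalable.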
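The encode-then-vary idea behind VAEs can be sketched in a few lines. Assuming an encoder (omitted here) that maps an input to the mean `mu` and log-variance `log_var` of a Gaussian over a latent code, training samples a code with the reparameterization trick and adds a KL-divergence term that keeps these Gaussians close to a standard normal. That regularization is what later allows sampling a fresh code from N(0, I) and decoding it into new data. The encoder output values below are made up.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    # z = mu + sigma * eps with eps ~ N(0, 1). Moving the randomness into eps keeps
    # z differentiable with respect to mu and log_var during training.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    # Closed-form KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian, summed over dimensions.
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


# Hypothetical encoder output for one input: a 2-D latent Gaussian.
mu, log_var = [0.8, -0.3], [-1.0, -0.5]
rng = random.Random(0)
z = reparameterize(mu, log_var, rng)                   # latent code passed to the decoder
kl = kl_to_standard_normal(mu, log_var)                # regularizer added to the training loss
new_z = [rng.gauss(0.0, 1.0) for _ in range(len(mu))]  # after training: decode this to get new data
print(z, kl, new_z)
```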

VAEs set the stage for Generative Adversarial Networks (GANs) and other models that produce increasingly realistic synthetic content. Transformers, introduced by Google researchers in 2017, revolutionized language models by using an encoder-decoder architecture and an attention mechanism to process and generate text.

Transformers serve as the basis for foundation models, which can be adapted to tasks ranging from classification to dialogue generation. They come in encoder-only (e.g., BERT), decoder-only (e.g., GPT), and encoder-decoder (e.g., T5) variants, each with its own strengths. Generative AI and large language models are evolving rapidly, with new models and innovations appearing frequently.
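The attention mechanism at the heart of transformers is scaled dot-product attention: each position scores every position with a dot product, turns the scores into weights with a softmax, and takes a weighted average of the values. The pure-Python sketch below also shows the causal mask that decoder-only models such as GPT apply so a token cannot attend to later tokens. In a real transformer the queries, keys, and values come from learned projections and are batched as matrices; those parts are omitted, and the token vectors are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0, so masked positions get zero weight
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, on plain lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # decoder-only models may not look at later tokens
            else:
                scores.append(sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        outputs.append([sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))])
    return outputs


# Self-attention over three hypothetical 2-D token embeddings (queries = keys = values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))
print(attention(tokens, tokens, tokens, causal=True))
```

With the causal mask, the first token can only attend to itself, so its output equals its own value vector; without the mask, every token mixes information from the whole sequence, as encoder-only models like BERT do.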

Common generative AI applications

Generative AI has found applications in various domains, producing creative and valuable outputs that range from images to text. Here are some common generative AI applications:

- Image Generation: Generative models like Generative Adversarial Networks (GANs) can produce realistic images that resemble those in a training dataset. This has applications in art, design, and even video game graphics.

- Text Generation: Language models, particularly transformer-based models like GPT (Generative Pre-trained Transformer), are used to generate coherent and contextually relevant text. This is used in chatbots, content creation, and even automated journalism.

- Style Transfer: Style transfer models use generative techniques to blend the style of one image with the content of another. This creates unique artistic effects and is used in applications like turning photographs into paintings.

- Data Augmentation: Generative AI can generate synthetic data to augment training datasets. This is particularly useful when there’s limited real-world data available for training machine learning models.

- Music Composition: Generative models can create music compositions based on existing pieces, styles, or patterns. This is used in music production, background music creation, and even generating jingles.

- Video Synthesis: Generative models can generate video sequences by extending patterns from existing videos. This is used in video editing, special effects, and virtual reality environments.
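For text generation, a language model such as GPT produces a score (logit) for every token in its vocabulary, converts the scores into probabilities, and samples the next token, repeating the process one token at a time. Many interfaces expose a temperature setting for this sampling step. The sketch below assumes a four-word vocabulary and made-up logits; it shows only the sampling step, not the model that produces the scores.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    # Lower temperature sharpens the distribution; higher temperature flattens it.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature, rng):
    # Pick the next token in proportion to its temperature-adjusted probability.
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical model scores for the word after "The cat sat on the".
vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]
rng = random.Random(42)
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 2) for p in probs], sample_next_token(vocab, logits, t, rng))
```

A low temperature makes output more predictable and repetitive; a high temperature makes it more varied but more likely to drift off-topic, which is one source of the "nonsensical outputs" listed among the cons earlier.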

Generative AI continues to evolve, and its applications are expanding across various industries and creative fields. Its ability to generate new content that captures the essence of existing data makes it a powerful tool for creativity, innovation, and problem-solving.

Where is generative AI headed?

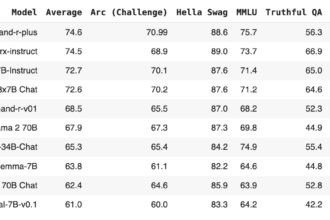

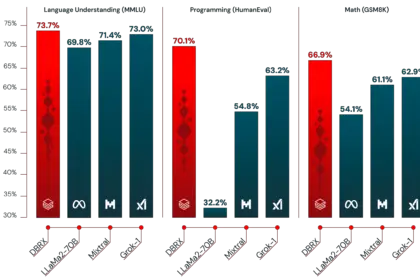

The future direction of generative AI is characterized by a shift from solely pursuing larger models to exploring smaller, domain-specialized models and model distillation. Historically, the focus had been on scaling up models for better performance, but recent evidence shows that smaller models trained on domain-specific data can outperform larger, general-purpose models in certain contexts.

Specialized models offer advantages in cost-efficiency and environmental impact. Model distillation, in which a smaller model is trained to imitate a larger one, also challenges the notion that only large models can deliver advanced capabilities; Stanford’s Alpaca chatbot, for example, was built by fine-tuning a comparatively small model on examples generated by a larger one. This suggests that more compact models could serve a wide range of practical applications effectively. While generative AI holds promise, it also introduces legal, financial, and reputational risks, including inaccurate or biased output and privacy and copyright issues.
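The classic form of knowledge distillation (Hinton et al., 2015) trains a small "student" model to match a large "teacher" model's output distribution, softened with a temperature. Alpaca's recipe differs, fine-tuning on text generated by a larger model rather than matching its probabilities, so the sketch below illustrates the general idea rather than Alpaca's method. The logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    # KL(teacher || student) between temperature-softened distributions, multiplied
    # by T^2 so gradient magnitudes stay comparable across temperatures.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt) - math.log(max(ps, 1e-12)))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


teacher = [3.0, 1.0, 0.0]  # hypothetical teacher logits for three classes
print(round(distillation_loss([2.5, 1.0, 0.0], teacher), 3))  # student close to the teacher
print(round(distillation_loss([0.0, 1.0, 3.0], teacher), 3))  # student far from the teacher
```

The softened teacher distribution carries more information than a single correct label (for example, which wrong answers are "almost right"), which is part of why a much smaller student can recover much of the teacher's behavior.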

What are Dall-E, ChatGPT and Bard?

Let’s delve into Dall-E, ChatGPT, and Bard, three prominent interfaces in the world of generative AI.

Dall-E is a multimodal AI application. Trained on a large dataset of images paired with text descriptions, it learns to connect the meaning of words to visual elements, which lets it interpret the context of a prompt. The original Dall-E, introduced in 2021, was built on OpenAI’s GPT architecture; a more capable version, Dall-E 2, followed in 2022 and lets users generate images in a variety of styles from their prompts.

ChatGPT, an AI-powered chatbot launched in November 2022, was initially built on OpenAI’s GPT-3.5 model. Earlier GPT models were available mainly through an API; ChatGPT instead lets users interact with the model and refine its responses through a conversational interface. OpenAI followed with GPT-4 on March 14, 2023. ChatGPT takes the conversation history into account, which makes interactions feel more natural. Recognizing the success of this interface, Microsoft made a major investment in OpenAI and integrated a version of GPT into its Bing search engine.

Bard is Google’s answer to this wave of transformer-based chatbots. Google had been an early pioneer of transformer techniques for processing language, proteins, and other types of content, and it shared some of these models with the research community, but it had not launched a public interface for them. Microsoft’s decision to integrate GPT into Bing prompted Google to quickly introduce a public-facing chatbot, Bard, built on a lightweight version of its LaMDA family of large language models. Bard’s debut was rocky: it incorrectly claimed that the James Webb Space Telescope had taken the first pictures of a planet outside our solar system, and the error was followed by a sharp drop in Google’s stock price. ChatGPT and Microsoft’s early GPT integration also drew criticism for inaccuracies and erratic behavior. Google later unveiled a new version of Bard built on its more advanced PaLM 2 model, allowing Bard to respond to queries more efficiently and more visually, a sign of Google’s commitment to refining its AI offerings.

PaLM API & MakerSuite

Google introduced the PaLM API and MakerSuite to make it easier to build generative AI applications. The PaLM API provides access to language models for tasks such as content generation, chat, and summarization. MakerSuite simplifies the workflow by helping developers iterate on prompts, augment data with synthetic examples, tune models, work with embeddings, and export their work as code. Safety features follow Google’s AI Principles while leaving developers room to define responsible use for their own applications. For production scale, Google Cloud’s Vertex AI adds enterprise support, security, and more advanced capabilities.

What are the concerns surrounding generative AI?

Generative AI, while a powerful and promising technology, also comes with several concerns that have been raised by researchers, ethicists, and the general public. Some of the main concerns include:

- Misinformation and Fake Content: Generative AI can be used to create highly convincing fake content, such as deepfake videos and realistic-looking news articles. This raises concerns about the spread of misinformation, as it becomes increasingly difficult to discern what is real and what is fabricated.

- Privacy Violations: Generative AI can create realistic images, video, or audio of real people without their consent, and convincing profiles of people who don’t exist can be used for identity fraud. This makes it harder to protect individuals’ personal information and likeness.

- Ethical Use of Technology: There are concerns about the ethical use of generative AI in various applications, including creating explicit or offensive content, violating copyrights, and manipulating public opinion. Decisions on how to use this technology responsibly and ethically become important.

- Bias and Fairness: Generative AI models can inadvertently learn biases present in their training data. This can result in generated content that perpetuates stereotypes, discrimination, and other forms of bias, which can have negative societal impacts.

- Unintended Consequences: As generative AI becomes more advanced, it may be used in ways its creators never intended, some of them harmful. These consequences could range from subtle biases in generated content to the creation of malicious tools.

- Job Displacement: In creative fields like writing, art, and design, generative AI has the potential to automate tasks that were traditionally done by humans. This could lead to job displacement for professionals in these fields.

- Security Risks: The use of generative AI in creating convincing phishing emails, social engineering attacks, or even generating fake credentials can pose security risks by making it harder to distinguish between legitimate and malicious content.

- Regulation and Legal Challenges: The rapid advancement of generative AI technology can outpace regulatory frameworks, making it difficult to enforce rules and laws around its use. Legal challenges might arise when determining responsibility for content generated by AI.

- Depersonalization of Content: The ease with which generative AI can create content might lead to a flood of impersonal and mass-produced material, which could potentially decrease the value of human creativity and craftsmanship.

- Loss of Trust: The prevalence of fake content generated by AI could erode trust in media, online platforms, and even human-generated content, leading to a broader sense of skepticism and uncertainty.

Addressing these concerns requires a multifaceted approach involving collaboration among researchers, policymakers, ethicists, and the technology industry. It’s important to develop guidelines, regulations, and responsible practices to ensure that generative AI is used in ways that benefit society while minimizing its potential negative impacts.

History of Generative AI

Generative AI has a history that spans several decades. Here’s a brief overview of its evolution:

- Early Beginnings (1950s-1970s): Theoretical foundations laid for AI and generative systems, limited by computational capabilities.

- Expert Systems (1980s): Rule-based expert systems developed to replicate human expertise.

- Neural Networks Resurgence (1990s-2000s): Neural networks regained interest, but deep learning gained traction later due to limited computing power.

- Deep Learning and Generative Models (2010s): Rise of deep learning, with GANs and VAEs making significant contributions to generative AI.

- Text Generation and Language Models (2018-Present): Beginning in 2018, the GPT series brought impressive text generation capabilities.

- Art and Creativity (2010s-Present): Generative AI applied to creative fields like art, music, and design.

- Applications and Concerns (2010s-Present): Expansion into image synthesis and style transfer, raising concerns about deepfakes, bias, and ethics.

- Continual Advancements (Present and Beyond): Ongoing research, refining techniques, addressing ethical considerations, and shaping the technology’s trajectory.

The future of generative AI

The future of generative AI holds great promise and potential for various domains. Here are some key directions and possibilities:

- Advanced Creative Expression: Generative AI will likely play a significant role in art, music, and literature, helping creators push the boundaries of creativity by providing novel ideas and inspiration.

- Personalization: AI-generated content could be tailored to individual preferences, enhancing user experiences in entertainment, marketing, and more.

- Medical and Scientific Innovation: Generative AI might aid in drug discovery, protein folding, and simulating complex biological processes, leading to breakthroughs in medicine and science.

- Design and Architecture: AI-generated designs could streamline architectural and industrial design processes, considering both functional and aesthetic aspects.

- Entertainment and Gaming: AI-generated characters, narratives, and environments could create more immersive and dynamic entertainment experiences.

- Virtual Worlds: AI-generated content could populate virtual worlds with diverse landscapes, creatures, and stories, enriching virtual reality experiences.

- Sustainable Solutions: Generative AI might assist in designing energy-efficient structures, optimizing supply chains, and developing environmentally friendly products.

- Education: AI-generated educational materials could adapt to individual learning styles, making learning more engaging and effective.

- Human-AI Collaboration: Generative AI could be used to augment human creativity, assisting professionals in generating ideas and prototypes.

- Ethical Considerations: Addressing bias, transparency, and accountability will remain crucial to ensure the responsible and fair use of generative AI.

- Regulation and Policy: As generative AI becomes more prevalent, regulations might be developed to govern its use, protecting against misuse and ensuring ethical standards.

- Interdisciplinary Collaboration: Collaboration between AI researchers, ethicists, artists, scientists, and policymakers will shape the evolution of generative AI.

- Challenges to Overcome: Research will continue to focus on improving the quality, diversity, and control of generated content while mitigating unintended consequences and negative impacts.

- AI in Assistive Technologies: Generative AI could help develop tools and solutions that aid people with disabilities in various aspects of their lives.

- Privacy and Security: Developing techniques to protect against the malicious use of generative AI, such as deepfake attacks, will be crucial.

The future of generative AI will likely be characterized by ongoing advancements, expanded applications, and a growing need for responsible development and deployment. As the technology matures, it will be essential to strike a balance between innovation and ethical considerations to ensure its positive impact on society.

FAQs

Below are some frequently asked questions about Generative AI.

Who created generative AI?

Generative AI is often traced back to Joseph Weizenbaum, who created the Eliza chatbot in the 1960s. Eliza was one of the earliest systems to generate conversational responses to users, although it relied on hand-written pattern-matching rules rather than learning from data. It nonetheless laid the groundwork for AI systems that produce content and responses from input and prompts. Since then, the field has evolved significantly with the advent of deep learning and neural networks, leading to more advanced generative models such as GANs, VAEs, and large language models.

Can Generative AI Replace Jobs?

Generative AI has the potential to both replace and create jobs. It can automate tasks like content generation and data analysis, potentially displacing some roles. However, it can also create new opportunities in AI development, customization, and management. While certain jobs may be automated, generative AI can also enhance human capabilities and lead to new roles in AI-related fields. The impact on the job market will depend on adoption rates and workforce adaptability.

How do I build a generative AI model?

To build a generative AI model:

1. Define Task: Clearly state what content you want the model to generate, such as text or images.

2. Collect Data: Gather diverse, relevant data for training.

3. Preprocess Data: Clean, format, and prepare the data for training.

4. Choose Model: Select an appropriate architecture, such as a GAN, VAE, or transformer.

5. Set Parameters: Configure model settings and hyperparameters.

6. Train Model: Train the model on the data so it learns the underlying patterns.

7. Evaluate: Measure the model’s performance using suitable metrics.

8. Fine-Tune: Adjust the model based on the evaluation results.

9. Generate Content: Use the trained model to create new content.

10. Deploy: Integrate the model into applications.

11. Monitor & Update: Track performance and update the model as needed.
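The steps above can be walked through end to end with a deliberately tiny model. This sketch is an assumption-heavy toy: a character-level bigram model (far simpler than a GAN, VAE, or transformer) trained on a made-up list of words, with comments marking which step each part corresponds to. Deploy and Monitor & Update are outside the scope of a single script.

```python
import math
import random
from collections import Counter, defaultdict

START, END = "^", "$"

# Define Task / Collect Data: generate short made-up words from a tiny, hypothetical corpus.
CORPUS = ["banana", "bandana", "cabana", "canal", "panama", "salsa", "alpaca", "banal"]
train_words, held_out_words = CORPUS[:-2], CORPUS[-2:]


def preprocess(words):
    # Preprocess Data: normalize case and mark where each word starts and ends.
    return [START + w.strip().lower() + END for w in words]


def train_bigram(sequences, smoothing):
    # Choose Model / Set Parameters / Train Model: a character bigram model with
    # add-k smoothing, trained by counting which character follows which.
    chars = sorted({ch for s in sequences for ch in s})
    next_chars = [ch for ch in chars if ch != START]
    counts = defaultdict(Counter)
    for s in sequences:
        for prev, nxt in zip(s, s[1:]):
            counts[prev][nxt] += 1
    model = {}
    for prev in chars:
        if prev == END:
            continue
        total = sum(counts[prev].values()) + smoothing * len(next_chars)
        model[prev] = {ch: (counts[prev][ch] + smoothing) / total for ch in next_chars}
    return model


def perplexity(model, sequences):
    # Evaluate: perplexity on held-out words (lower is better).
    log_prob, n = 0.0, 0
    for s in sequences:
        for prev, nxt in zip(s, s[1:]):
            log_prob += math.log(model[prev][nxt])
            n += 1
    return math.exp(-log_prob / n)


def generate(model, rng, max_len=12):
    # Generate Content: sample one character at a time until the end marker.
    out, prev = [], START
    for _ in range(max_len):
        options = list(model[prev])
        nxt = rng.choices(options, weights=[model[prev][ch] for ch in options], k=1)[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


train_seqs, held_out_seqs = preprocess(train_words), preprocess(held_out_words)

# Fine-Tune: pick the smoothing strength with the best held-out perplexity.
best_k = min((0.1, 0.5, 1.0), key=lambda k: perplexity(train_bigram(train_seqs, k), held_out_seqs))
model = train_bigram(train_seqs, best_k)
rng = random.Random(0)
print(best_k, round(perplexity(model, held_out_seqs), 2), [generate(model, rng) for _ in range(5)])
```

Swapping the bigram counts for a neural network changes the Choose Model and Train Model steps, but the surrounding loop of preparing data, evaluating on held-out examples, adjusting, and generating stays much the same.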