Gemma, an open language model family from Google DeepMind, is now available to the community through Hugging Face. It comes in two sizes, 2 billion and 7 billion parameters, each with pre-trained and instruction-tuned variants. You can use it on GitHub, serve it with TGI, or deploy and fine-tune it with Vertex Model Garden and Google Kubernetes Engine.

Google and Hugging Face collaborated to ensure tight integration with the Hugging Face ecosystem. The Hub hosts the 4 open-access models: 2 base models and 2 fine-tuned ones.

What is Gemma?

Gemma is a family of 4 new LLMs from Google, based on Gemini. The models come in two sizes, 2 billion and 7 billion parameters, each with a base and an instruction-tuned variant. They can handle tasks such as writing, translation, and question answering. All variants can run on a range of consumer hardware without quantization and have a context length of 8K tokens.

- gemma-2b: Base 2B model.

- gemma-2b-it: Instruction fine-tuned version of the base 2B model.

- gemma-7b: Base 7B model.

- gemma-7b-it: Instruction fine-tuned version of the base 7B model.

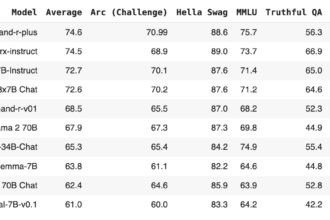

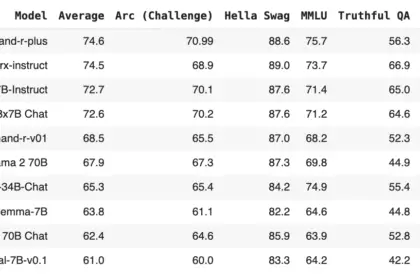

How good are the Gemma models? The table below summarizes how the base models compare with other open models on the LLM Leaderboard (higher scores are better):

| Model | License | Commercial use? | Pretraining size [tokens] | Leaderboard score ⬇️ |
|---|---|---|---|---|
| Llama 2 70B Chat (reference) | Llama 2 license | ✅ | 2T | 67.87 |
| Gemma 7B | Gemma license | ✅ | 6T | 63.75 |
| DeciLM-7B | Apache 2.0 | ✅ | unknown | 61.55 |
| Phi-2 (2.7B) | MIT | ✅ | 1.4T | 61.33 |
| Mistral-7B-v0.1 | Apache 2.0 | ✅ | unknown | 60.97 |
| Llama 2 7B | Llama 2 license | ✅ | 2T | 54.32 |
| Gemma 2B | Gemma license | ✅ | 2T | 46.51 |

Prompt format

The base models have no prompt format. Like other base models, they can be used to continue an input sequence with a plausible continuation or for zero-shot/few-shot inference. They are also a solid foundation for fine-tuning on your own use cases. The instruct versions use a very simple conversation structure:

```
<start_of_turn>user
knock knock<end_of_turn>
<start_of_turn>model
who is there<end_of_turn>
<start_of_turn>user
LaMDA<end_of_turn>
<start_of_turn>model
LaMDA who?<end_of_turn>
```

This format must be reproduced exactly for effective use.
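To see how mechanical the turn structure shown above is, here is a minimal sketch that renders a list of messages into it by hand. The helper name `build_gemma_prompt` is hypothetical and exists only for illustration. In practice the tokenizer's `apply_chat_template` (used later in this post) is the safer route, since it may also add special tokens that this sketch leaves out.

```python
from typing import Dict, List


def build_gemma_prompt(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render chat messages in the <start_of_turn>/<end_of_turn> format.

    Hypothetical helper for illustration; roles must be "user" or "model".
    """
    parts = []
    for message in messages:
        role = message["role"]
        if role not in ("user", "model"):
            raise ValueError(f"unsupported role: {role!r}")
        # Each turn: opening marker with the role, the content, closing marker
        parts.append(f"<start_of_turn>{role}\n{message['content']}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so that generation continues as the model
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


prompt = build_gemma_prompt([{"role": "user", "content": "knock knock"}])
print(prompt)
```

Leaving the final model turn open is what cues the instruct model to answer rather than to continue the user's text.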

Using gemma-7b-it with Transformers

Here’s a quick rundown on how to use gemma-7b-it with transformers. It requires about 18 GB of RAM, which fits on consumer GPUs such as the 3090 or 4090.
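As a rough sanity check on that memory figure, weight memory is approximately the parameter count times the bytes per parameter. The sketch below assumes about 8.5 billion total parameters for the 7B model once embeddings are counted (an assumption, not a number stated in this post). Activations and the KV cache come on top of the weights.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption for illustration: total parameter count including embeddings
ASSUMED_7B_PARAMS = 8.5e9

bf16_gb = weight_memory_gb(ASSUMED_7B_PARAMS, 2)  # bfloat16 stores 2 bytes per parameter
fp32_gb = weight_memory_gb(ASSUMED_7B_PARAMS, 4)  # float32 stores 4 bytes per parameter

print(f"bfloat16 weights: {bf16_gb:.1f} GB")
print(f"float32 weights:  {fp32_gb:.1f} GB")
```

Under these assumptions the bfloat16 weights alone take roughly 17 GB, which is consistent with the figure quoted above and explains why the Transformers example that follows loads the model in bfloat16.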

```python
from transformers import pipeline
import torch

model = "google/gemma-7b-it"

# Load the model in bfloat16 on the GPU
pipe = pipeline(
    "text-generation",
    model=model,
    model_kwargs={"torch_dtype": torch.bfloat16},
    device="cuda",
)

messages = [
    {"role": "user", "content": "What is Fine Tuning? Write in one line."},
]
# Render the chat messages into Gemma's prompt format
prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
outputs = pipe(
    prompt,
    max_new_tokens=256,
    do_sample=True,
    temperature=0.7,
    top_k=50,
    top_p=0.95,
)
# Strip the prompt so that only the newly generated text is printed
print(outputs[0]["generated_text"][len(prompt):])
```

```
Fine tuning is a machine learning technique that involves adjusting the parameters of a pre-trained model to fit a specific downstream task.
```

How to Fine-Tune Gemma Models with Hugging Face and PEFT

Users are required to sign a consent form before they can access Gemma model artifacts.

In addition, Gemma models are compatible with torch.compile() with CUDA graphs, resulting in a ~4x inference time speedup!

To use Gemma models with transformers, make sure to use a recent transformers release. This walkthrough pins 4.38.1:

```bash
pip install -U "transformers==4.38.1"
```

1. Download the Model and Tokenizer

- The model artifacts are available on the Hugging Face Hub once you have submitted the consent form.

- This example downloads the model and tokenizer, using BitsAndBytesConfig for 4-bit weight quantization.

```python
import os

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

model_id = "google/gemma-2b"

# 4-bit NF4 weight quantization, with computation in bfloat16
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.bfloat16,
)

# HF_TOKEN must hold an access token for an account that has accepted the Gemma terms
tokenizer = AutoTokenizer.from_pretrained(model_id, token=os.environ["HF_TOKEN"])
# device_map={"": 0} places the whole model on GPU 0
model = AutoModelForCausalLM.from_pretrained(
    model_id, quantization_config=bnb_config, device_map={"": 0}, token=os.environ["HF_TOKEN"]
)
```

2. Test the model

Now, let's test the model before starting the fine-tuning:

```python
text = "Quote: The greatest glory in living"
device = "cuda:0"

# Tokenize the prompt and move the tensors to the GPU
inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

The model produces a reasonable completion, with some additional tokens:

```
Quote: The greatest glory in living lies not in never falling, but in rising every time we fall.
-Nelson Mandela
```

However, this is not the format we want the answer to follow. Let’s fine-tune the model to generate output in the following format:

```
Quote: The greatest glory in living lies not in never falling, but in rising every time we fall.
Author: Nelson Mandela
```

3. Start the fine-tuning process

Ok. Let’s begin the process of fine-tuning. We will use the English quotes dataset for this purpose.

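```python
from datasets import load_dataset

# Load the quotes dataset and tokenize the "quote" column in batches
data = load_dataset("Abirate/english_quotes")
data = data.map(lambda samples: tokenizer(samples["quote"]), batched=True)
```

4. Use LoRA config

Now we set up the LoRA config and launch training: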

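```python
import transformers
from peft import LoraConfig
from trl import SFTTrainer

# Assumption: rank-8 adapters on the attention and MLP projections.
# These values are illustrative; tune them for your task.
lora_config = LoraConfig(
    r=8,
    target_modules=["q_proj", "o_proj", "k_proj", "v_proj", "gate_proj", "up_proj", "down_proj"],
    task_type="CAUSAL_LM",
)


def formatting_func(examples):
    # Assumes TRL passes a batch (dict of lists) and expects a list of strings back
    return [
        f"Quote: {quote}\nAuthor: {author}"
        for quote, author in zip(examples["quote"], examples["author"])
    ]


trainer = SFTTrainer(
    model=model,
    train_dataset=data["train"],
    args=transformers.TrainingArguments(
        per_device_train_batch_size=1,
        gradient_accumulation_steps=4,  # effective batch size of 1 x 4 = 4
        warmup_steps=2,
        max_steps=10,
        learning_rate=2e-4,
        fp16=True,
        logging_steps=1,
        output_dir="outputs",
        optim="paged_adamw_8bit",
    ),
    peft_config=lora_config,
    formatting_func=formatting_func,
)
trainer.train()
```

5. Test the fine-tuned model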

Once training finishes, we can test the fine-tuned Gemma with a prompt similar to the one used earlier:

```python
text = "Quote: The best and most beautiful things in"
device = "cuda:0"

inputs = tokenizer(text, return_tensors="pt").to(device)

outputs = model.generate(**inputs, max_new_tokens=20)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

The output now follows the desired format:

```
Quote: The best and most beautiful things in the world cannot be seen or even touched - they must be felt with the heart.
Author: Helen Keller
```

Why PEFT?

Training language models is memory- and compute-intensive, even at small sizes. This is a problem for users who rely on openly available compute platforms such as Colab or Kaggle for learning and experimentation. For enterprise users, the cost of adapting these models to different domains is also a key consideration. PEFT (parameter-efficient fine-tuning) addresses both by training only a small number of additional parameters, which makes adaptation scalable and cost-effective.
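To make the saving concrete, consider LoRA, the PEFT method used in this walkthrough. It freezes a weight matrix of shape d_out × d_in and trains two low-rank factors instead, which together hold r · (d_in + d_out) parameters. The sketch below counts both. The 2048 × 2048 shape and rank 8 are illustrative assumptions, not Gemma's actual layer dimensions.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when the whole weight matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for LoRA factors A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# Illustrative dimensions (assumed, not taken from the Gemma architecture)
d_in, d_out, rank = 2048, 2048, 8

full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the matrix")
```

For this assumed layer, LoRA trains well under 1% of the parameters that full fine-tuning would update, which is why it fits on modest GPUs.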

PyTorch on GPU and TPU

The Gemma models in Hugging Face transformers are optimized for both PyTorch and PyTorch/XLA. This lets both TPU and GPU users access and experiment with Gemma models as needed. The FSDP via SPMD integration also lets other Hugging Face models take advantage of TPU acceleration via PyTorch/XLA.

Next Steps

We walked through a simple example, adapted from the source notebook, that demonstrates LoRA fine-tuning on Gemma models. The complete Colab for GPU is available here, and the complete script for TPU can be found here.

Let’s Wrap

This post introduced Gemma, a new family of open language models. Gemma performs well on academic benchmarks for language understanding, safety, and reasoning. Two model sizes (2 billion and 7 billion parameters) are available, each with pretrained and fine-tuned checkpoints. On 11 out of 18 text-based tasks, Gemma outperforms similarly sized open models. The release also includes thorough assessments of the models’ safety and responsibility aspects, along with a detailed description of model development. The responsible release of LLMs is critical for improving the safety of frontier models and for enabling the next wave of LLM innovations.