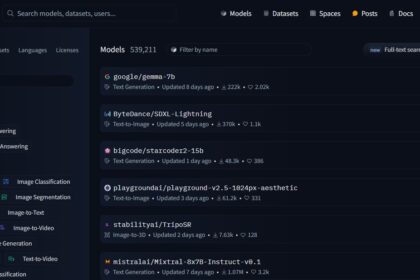

DeepLearning.AI has recently announced a new short course titled “Open Source Models with Hugging Face 🤗,” instructed by the skilled team at Hugging Face.

As many of you already know, Hugging Face has been a game changer, enabling developers to easily access thousands of pre-trained open source models and assemble them into new applications. This course covers best practices for building in this manner, including how to identify and select models.

What you will learn in this course

Using the Transformers library, you’ll learn to work with a range of models for text, audio, and image tasks, including zero-shot image segmentation, zero-shot audio classification, and speech recognition. You’ll also work with multimodal models for visual question answering, image search, and image captioning. You can then demo your creations locally, on the cloud, or through an API using Gradio and Hugging Face Spaces.

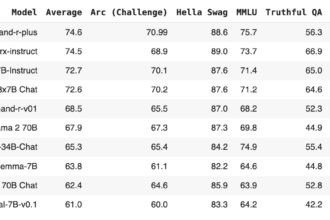

- Explore Hugging Face Hub by identifying and sorting open source models according to task, ranking, and memory requirements.

- Use the Transformers library to perform text, audio, image, and multimodal tasks with only a few lines of code.

- Use Gradio and Hugging Face Spaces to share your AI apps through a user-friendly interface or an API, and run them on the cloud.
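To make the "memory requirements" criterion concrete, here is a minimal sketch of a back-of-the-envelope estimate. It assumes the only thing you know is the parameter count and the numeric precision, and it covers the weights alone; real usage is higher because of activations and framework overhead. The helper name `estimate_weight_memory_gb` is hypothetical and not part of any Hugging Face library.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def estimate_weight_memory_gb(num_params: int, dtype: str = "float32") -> float:
    """Rough size of the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    This is an illustrative helper, not a library function. It ignores
    activations, caches, and any runtime overhead.
    """
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"Unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # A hypothetical 1-billion-parameter model at two precisions.
    print(estimate_weight_memory_gb(1_000_000_000, "float32"))
    print(estimate_weight_memory_gb(1_000_000_000, "float16"))
```

An estimate like this is only a first filter when comparing models on the Hub; it is worth leaving some headroom above the number it gives.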

Course Workflow

In this course, you’ll select open source models from the Hugging Face Hub for NLP, audio, image, and multimodal tasks and run them with the Hugging Face Transformers library. You’ll then convert your code into a simple app that runs on the cloud using Gradio and Hugging Face Spaces.
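As a rough illustration of that workflow, the sketch below wraps a speech recognition pipeline in a small Gradio interface. It is not taken from the course materials: the model name `openai/whisper-tiny` and the helper names `make_transcribe_fn` and `build_demo` are assumptions chosen for the example, and both libraries are imported lazily so the file can be loaded without them installed.

```python
from typing import Callable, Optional


def make_transcribe_fn(asr: Callable[[str], dict]) -> Callable[[Optional[str]], str]:
    """Adapt an ASR callable (audio path -> {"text": ...}) to a plain text function."""

    def transcribe(audio_path: Optional[str]) -> str:
        # Gradio passes None when the user submits without recording or uploading.
        if audio_path is None:
            return "No audio received."
        return asr(audio_path)["text"].strip()

    return transcribe


def build_demo(model_name: str = "openai/whisper-tiny"):
    """Build (but do not launch) a Gradio app around a Transformers ASR pipeline.

    Requires `pip install gradio transformers` plus a backend such as PyTorch.
    The default model name is an assumption for this sketch.
    """
    import gradio as gr
    from transformers import pipeline

    asr = pipeline("automatic-speech-recognition", model=model_name)
    return gr.Interface(
        fn=make_transcribe_fn(asr),
        inputs=gr.Audio(type="filepath"),  # hands the function a path to the audio file
        outputs="text",
        title="Speech to text",
    )
```

Calling `build_demo().launch()` would start the app locally. Keeping the model call behind a small function like `transcribe` also makes it easy to swap in a different task later without touching the interface code.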

The short course covers:

- Use the Transformers library to turn a small language model into an interactive chatbot that can respond to follow-up questions.

- Translate between languages, summarize documents, and measure the similarity between two pieces of text, which can be used to find and retrieve information.

- Use Text to Speech (TTS) to convert text to audio and Automatic Speech Recognition (ASR) to convert audio to text.

- Perform zero-shot audio classification, which classifies audio without fine-tuning the model.

- Create an audio description of an image by using both object detection and text-to-speech models.

- Identify objects or regions in an image by prompting a zero-shot image segmentation model with points that mark the object you want to select.

- Execute multimodal tasks such as visual question answering, image search, and image captioning.

- Utilize Gradio and Hugging Face Spaces to share your AI app, which will operate in a user-friendly interface on the cloud or as an API.
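To give a feel for the text comparison and retrieval item above, here is a minimal sketch of ranking documents by cosine similarity. It assumes each text has already been turned into an embedding vector by some sentence embedding model; the toy vectors and the helper names `cosine_similarity` and `rank_by_similarity` are made up for illustration and are not the course's code.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: Sequence[float], docs: List[Sequence[float]]) -> List[int]:
    """Return document indices ordered from most to least similar to the query."""
    scores = [cosine_similarity(query, d) for d in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    # Toy 2-dimensional "embeddings"; real ones have hundreds of dimensions.
    query_vec = [1.0, 0.0]
    doc_vecs = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    print(rank_by_similarity(query_vec, doc_vecs))
```

In a retrieval setting, the top-ranked indices point to the stored texts most likely to answer the query.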

Instructors

- Maria Khalusova: Member of Technical Staff at Hugging Face

- Marc Sun: Machine Learning Engineer at Hugging Face

- Younes Belkada: Machine Learning Engineer at Hugging Face