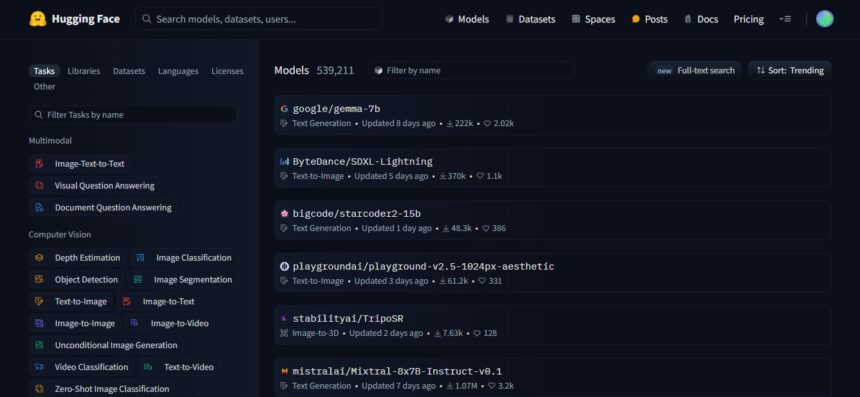

Transformers is a state-of-the-art machine learning library by Hugging Face that runs on PyTorch, TensorFlow, and JAX. It provides thousands of pre-trained models for text, vision, and audio tasks that you can use as they are or fine-tune.

Introduction

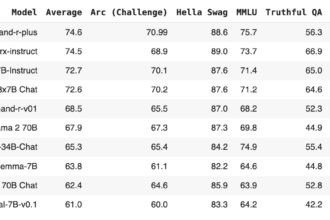

Hugging Face is a machine learning community platform created by Julien Chaumond, Clément Delangue, and Thomas Wolf in 2016. It aims to offer thousands of pre-trained models based on the state-of-the-art transformer architecture for data scientists, AI practitioners, and ML engineers.

The Transformers library lets you create and use those shared models. Anyone can download and use thousands of pre-trained models from the Model Hub. You can also upload your own models to the Hub!

These models support common tasks in different modalities, such as:

📝 Natural Language Processing: Text Generation, Text Classification, Summarization, Translation, Named Entity Recognition, Question Answering, Language Modeling and Multiple Choice.

🖼️ Computer Vision: Object Detection, Image Classification, and Segmentation.

🗣️ Audio: Automatic Speech Recognition and Audio Classification.

🐙 Multimodal: Optical Character Recognition, Table Question Answering, Video Classification, Document Question Answering, and Visual Question Answering.

Transformers supports PyTorch, TensorFlow, and JAX. You can train a model in a few lines of code with one framework and load it for inference in another.

Get started with Transformers

In this section, we will explore how to install the Transformers library in Python and use our first tool: the pipeline() function.

Transformers is tested on Python 3.6+, PyTorch 1.1.0+, TensorFlow 2.0+, and Flax. Install Transformers with the following command:

pip install transformers

It’s recommended to install Transformers in a virtual environment. You’ll also need to install your preferred machine learning framework:

pip install torch

pip install tensorflow

Before we explore the functionality of Transformer models under the hood, let’s take a closer look at some interesting NLP problems.

Working with pipelines

The pipeline() function has the following structure:

from transformers import pipeline

# To use a default model & tokenizer for a given task (e.g. question-answering)

pipeline("<task-name>")

# To use an existing model

pipeline("<task-name>", model="<model_name>")

# To use a custom model/tokenizer

pipeline("<task-name>", model="<model_name>", tokenizer="<tokenizer_name>")

The Transformers library’s most basic object is the pipeline() function. It connects a model with its necessary preprocessing and postprocessing steps, so we can input text directly and receive an understandable response:

from transformers import pipeline

classifier = pipeline("sentiment-analysis")

classifier("This hugging face course is great")

[{'label': 'POSITIVE', 'score': 0.9998687505722046}]

You can even pass more than one sentence:

classifier(

    ["A great course by exnrt.com", "The offical course is difficult for beginners"]

)

[{'label': 'POSITIVE', 'score': 0.9996315240859985},

 {'label': 'NEGATIVE', 'score': 0.9986200332641602}]

By default, this pipeline selects a particular pretrained model that has been fine-tuned for sentiment analysis in English. The model is downloaded and cached when you create the classifier object. If you rerun the command, the cached model is used instead of being downloaded again.

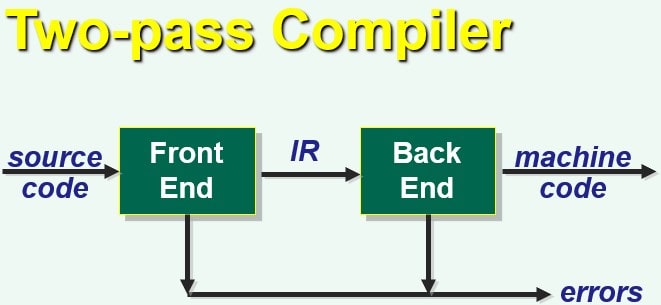

Three main steps take place when you pass text to a pipeline:

- First, the text is preprocessed into a format the model can understand.

- Second, the preprocessed input is passed to the model.

- Third, the model’s predictions are post-processed so that you can make sense of them.
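The three steps can be sketched in plain Python. This is only a toy illustration, not how the library implements a pipeline: the vocabulary, weights, and function names below are hypothetical stand-ins for a trained tokenizer and model.

```python
import math

# Hypothetical toy vocabulary and weights; a real pipeline uses a trained
# tokenizer and a trained model instead.
VOCAB = {"great": 0, "good": 1, "difficult": 2, "bad": 3}
# One row per token id; columns are raw scores for [NEGATIVE, POSITIVE].
WEIGHTS = [[-2.0, 2.0], [-1.0, 1.0], [1.5, -1.5], [2.0, -2.0]]
LABELS = ["NEGATIVE", "POSITIVE"]


def preprocess(text):
    """Step 1: turn raw text into token ids the model understands."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    return [VOCAB[w] for w in words if w in VOCAB]


def model(token_ids):
    """Step 2: map token ids to one raw score (logit) per label."""
    logits = [0.0, 0.0]
    for token_id in token_ids:
        for j, weight in enumerate(WEIGHTS[token_id]):
            logits[j] += weight
    return logits


def postprocess(logits):
    """Step 3: convert logits to a readable label and score via softmax."""
    highest = max(logits)
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": LABELS[best], "score": probs[best]}


def toy_pipeline(text):
    """Chain the three steps, as a pipeline object does behind the scenes."""
    return postprocess(model(preprocess(text)))


print(toy_pipeline("This course is great"))
```

The returned dictionary has the same shape as the classifier output shown earlier, a label plus a score between 0 and 1, which is why the score reads as a probability.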

Let’s take the example of using the pipeline() for automatic speech recognition.

from transformers import pipeline

transcriber = pipeline(task="automatic-speech-recognition")

#transcriber = pipeline(model="openai/whisper-large-v2")

transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

{'text': 'I HAVE A DREAM BUT ONE DAY THIS NATION WILL RISE UP LIVE UP THE TRUE MEANING OF ITS TREES'}

Pipeline Parameters

pipeline() supports many parameters; some are task-specific and some are general to all pipelines. In general, you can specify parameters either when you create the pipeline or when you call it. Let’s check out 3 important ones:

Device

When you pass device=n, the pipeline automatically places the model on the specified device. This works regardless of whether you are using PyTorch or TensorFlow.

transcriber = pipeline(model="openai/whisper-large-v2", device=0)

Batch size

By default, pipelines do not batch inference. The reason is that batching is not necessarily faster and can actually be slower in some cases. But if it works in your use case, you can use:

transcriber = pipeline(model="openai/whisper-large-v2", device=0, batch_size=2)

audio_filenames = [f"https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/{i}.flac" for i in range(1, 5)]

texts = transcriber(audio_filenames)

This runs the pipeline on the 4 provided audio files, but passes them to the model on the GPU in batches of 2, without requiring any further code from you. The output should always match what you would have received without batching; batching is only meant as a way to get more speed out of the pipeline.
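As a rough mental model of what batch_size=2 means for 4 inputs, the sketch below groups an iterable into batches and checks that the results do not depend on the batch size. The helper names and the fake_model function are hypothetical; the library handles this grouping internally.

```python
from itertools import islice


def make_batches(items, batch_size):
    """Yield lists of up to batch_size items, preserving input order."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def fake_model(batch):
    """Hypothetical stand-in for one model forward pass over a batch."""
    return [name.upper() for name in batch]


def run(items, batch_size=1):
    """Process items batch by batch and return the results as one flat list."""
    results = []
    for batch in make_batches(items, batch_size):
        results.extend(fake_model(batch))
    return results


files = [f"{i}.flac" for i in range(1, 5)]
print(list(make_batches(files, 2)))
print(run(files, batch_size=2) == run(files, batch_size=1))
```

Because make_batches reads lazily from an iterator, the same approach also works for inputs that are generated on the fly rather than held in a list.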

Task specific parameters

Every task provides task-specific parameters that give you additional options and flexibility to get the job done. For instance, the transformers.AutomaticSpeechRecognitionPipeline.__call__() method has a return_timestamps parameter, which sounds promising for subtitling videos.
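To make the subtitling idea concrete, here is a minimal sketch that turns timestamped chunks into SRT-style subtitle entries. It assumes chunks shaped like the return_timestamps=True output shown in this section (a list of dictionaries with a 'timestamp' tuple and a 'text' string) and that both timestamps are present. The function names are hypothetical and not part of the library.

```python
def format_srt_time(seconds):
    """Format a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def chunks_to_srt(chunks):
    """Build numbered SRT entries from chunks with (start, end) timestamps."""
    entries = []
    for index, chunk in enumerate(chunks, start=1):
        start, end = chunk["timestamp"]
        entries.append(
            f"{index}\n"
            f"{format_srt_time(start)} --> {format_srt_time(end)}\n"
            f"{chunk['text'].strip()}"
        )
    return "\n\n".join(entries)


# Sample data copied from the pipeline output shown in this section.
chunks = [
    {"timestamp": (0.0, 11.88), "text": " I have a dream that one day this nation will rise up and live out the true meaning of its"},
    {"timestamp": (11.88, 12.38), "text": " creed."},
]
print(chunks_to_srt(chunks))
```

The call that produces chunks of this shape looks like this: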

transcriber = pipeline(model="openai/whisper-large-v2", return_timestamps=True)

transcriber("https://huggingface.co/datasets/Narsil/asr_dummy/resolve/main/mlk.flac")

As the output shows, the model inferred the text and also reported when the different parts were spoken:

{'text': ' I have a dream that one day this nation will rise up and live out the true meaning of its creed.', 'chunks': [{'timestamp': (0.0, 11.88), 'text': ' I have a dream that one day this nation will rise up and live out the true meaning of its'}, {'timestamp': (11.88, 12.38), 'text': ' creed.'}]}

Vision pipeline

Using pipeline() for vision tasks is practically identical. Specify your task and pass your image to the classifier. The image can be a link, a local path, or a base64-encoded image. For example, what is in the image?

from transformers import pipeline

vision_classifier = pipeline(model="google/vit-base-patch16-224")

preds = vision_classifier(

    images="https://exnrt.com/wp-content/uploads/2022/03/3-2.jpg"

)

preds = [{"score": round(pred["score"], 3), "label": pred["label"]} for pred in preds]

preds

[{'score': 0.733, 'label': 'notebook, notebook computer'},

{'score': 0.151, 'label': 'laptop, laptop computer'},

{'score': 0.028, 'label': 'desktop computer'},

{'score': 0.015, 'label': 'screen, CRT screen'},

{'score': 0.012, 'label': 'modem'}]

Using pipelines on a dataset

The pipeline can also perform inference on a large dataset. The simplest way to do this is to use an iterator:

def data():

    for i in range(1000):

        yield f"My example {i}"

pipe = pipeline(model="openai-community/gpt2", device=0)

generated_characters = 0

for out in pipe(data()):

    generated_characters += len(out[0]["generated_text"])

The iterator data() yields each input, and the pipeline automatically recognizes that the input is iterable. It starts fetching data while it continues processing on the GPU (this uses DataLoader under the hood). This matters because you don’t have to allocate memory for the whole dataset and can feed the GPU as fast as possible. Since batching could speed things up, it may be worth tuning the batch_size parameter here. The easiest way to iterate over a dataset is to load one from Hugging Face Datasets:

# KeyDataset is a util that will just output the item we're interested in.

from transformers.pipelines.pt_utils import KeyDataset

from datasets import load_dataset

pipe = pipeline(model="hf-internal-testing/tiny-random-wav2vec2", device=0)

dataset = load_dataset("hf-internal-testing/librispeech_asr_dummy", "clean", split="validation[:10]")

for out in pipe(KeyDataset(dataset, "audio")):

    print(out)

Using pipeline on large models with 🤗 accelerate

With accelerate, you can easily run the pipeline on large models! First, make sure you have installed accelerate with pip install accelerate. Then load your model with device_map="auto". We will use facebook/opt-1.3b for our example.

# pip install accelerate

import torch

from transformers import pipeline

pipe = pipeline(model="facebook/opt-1.3b", torch_dtype=torch.bfloat16, device_map="auto")

output = pipe("This is a cool example!", do_sample=True, top_p=0.95)

You can also load models in 8-bit by installing bitsandbytes and adding the argument load_in_8bit=True:

# pip install accelerate bitsandbytes

import torch

from transformers import pipeline

pipe = pipeline(model="facebook/opt-1.3b", device_map="auto", model_kwargs={"load_in_8bit": True})

output = pipe("This is a cool machine learning example!", do_sample=True, top_p=0.95)

Note that you can replace the checkpoint with any Hugging Face model that supports large model loading, such as BLOOM!
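To get a feel for why bfloat16 and 8-bit loading matter for large models, the back-of-the-envelope sketch below estimates the memory needed for the weights alone. It assumes roughly 1.3 billion parameters (taken from the checkpoint name) and ignores activations and any layers that are kept in higher precision, so treat the numbers as rough lower bounds rather than measurements.

```python
# Bytes used to store a single parameter in each format.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: about 1.3 billion parameters, as the name opt-1.3b suggests.
num_params = 1.3e9

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weight_memory_gb(num_params, dtype):.1f} GB")
```

Under these assumptions, halving the bytes per parameter halves the weight memory, which is what makes larger checkpoints fit on a single device.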