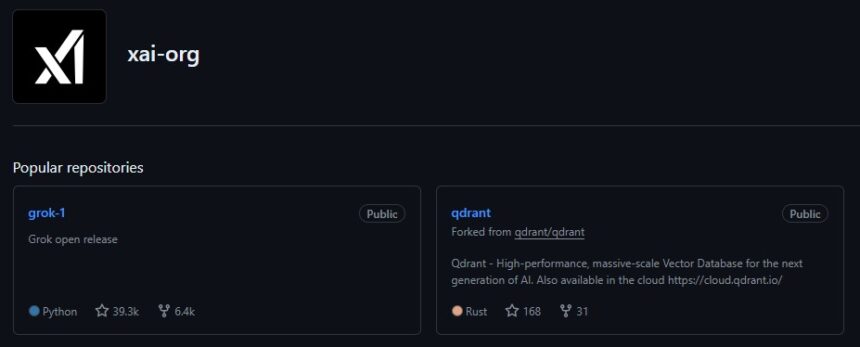

On Sunday, Elon Musk’s AI company, xAI, released the base model weights and network architecture of Grok-1, a large language model built to compete with OpenAI’s ChatGPT. The release of open weights via GitHub and BitTorrent is in line with Musk’s ongoing criticism of, and legal battle against, OpenAI for not making its AI models available to the public.

The Grok-1 model

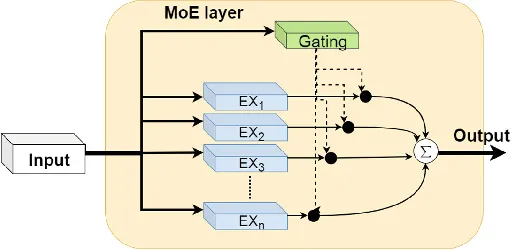

Grok-1 is the raw base model checkpoint from the pre-training phase, which concluded in October 2023. It has not been fine-tuned for any particular application, such as dialogue. The model uses a Mixture-of-Experts architecture with 314 billion parameters, of which 25% are active on a given token. xAI trained Grok-1 from scratch using a custom training stack built on JAX and Rust.
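In a Mixture-of-Experts layer, a small router scores every expert for each token and only the best-scoring few actually run. The sketch below illustrates top-2 routing over 8 experts in plain Python. The toy experts, the router logits, and the choice to renormalize the softmax over just the two selected experts are illustrative assumptions, not details of xAI’s implementation.

```python
import math
from typing import Callable, List

def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(
    x: List[float],
    router_logits: List[float],
    experts: List[Callable[[List[float]], List[float]]],
    top_k: int = 2,
) -> List[float]:
    """Route one token to its top_k experts and mix their outputs.

    Only top_k of len(experts) experts are evaluated, so only a fraction
    of the expert weights is used for this token.
    """
    # Indices of the top_k highest router scores (ties keep original order).
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Renormalize the router weights over the chosen experts only (assumption).
    weights = softmax([router_logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

# Toy experts: expert i simply scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
print(moe_forward([1.0, 2.0], [0, 0, 5, 0, 0, 0, 5, 0], experts))
```

Here experts 2 and 6 win the routing with equal scores, so each contributes half of the output; the other six experts are never called. With 2 of 8 experts active, a token touches 25% of the expert weights.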

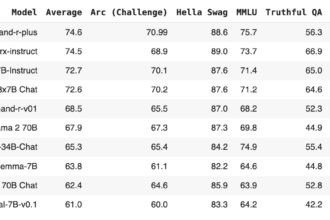

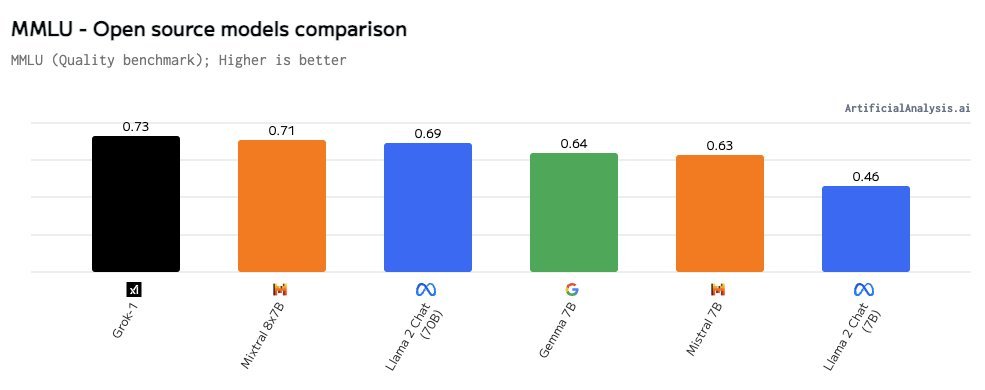

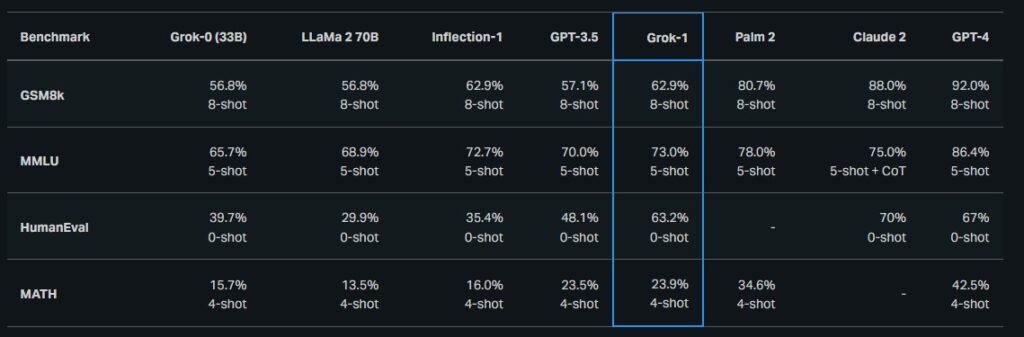

Grok Benchmark:

Grok-1 scores 73% on MMLU, beating Llama 2 70B (68.9%) and Mixtral 8x7B (71%).

Key Technical Specifications:

- 314 billion parameters

- Mixture-of-Experts architecture with 8 experts (2 active per token)

- SentencePiece tokenizer with 131,072 tokens

- Maximum context window of 8,192 tokens

- Support for 8-bit quantization and activation sharding

- 64 layers

- 48 attention heads for queries, 8 for keys/values

- Support for Rotary Positional Embeddings (RoPE)

- 6,144-dimensional embeddings
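The specifications above can be collected into a small config object from which a few useful quantities follow. This is a hypothetical sketch: the class name is made up, and the values (including the 6,144 embedding size, 8 key/value heads, and 8,192-token context) are taken from the xai-org/grok-1 README. The KV-cache estimate assumes bf16 values (2 bytes each), a batch size of 1, and no cache compression.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Grok1Config:
    # Hypothetical container; values as listed in the xai-org/grok-1 README.
    vocab_size: int = 131_072
    num_layers: int = 64
    num_q_heads: int = 48
    num_kv_heads: int = 8
    emb_size: int = 6_144
    num_experts: int = 8
    num_selected_experts: int = 2
    max_seq_len: int = 8_192

    @property
    def head_dim(self) -> int:
        # The embedding is split evenly across the query heads.
        return self.emb_size // self.num_q_heads

    @property
    def q_heads_per_kv_head(self) -> int:
        # Grouped-query attention: several query heads share one K/V head.
        return self.num_q_heads // self.num_kv_heads

    def kv_cache_bytes(self, seq_len: int, bytes_per_value: int = 2) -> int:
        # Keys and values (factor 2) for every layer, K/V head, and position.
        return (2 * self.num_layers * self.num_kv_heads * self.head_dim
                * seq_len * bytes_per_value)

cfg = Grok1Config()
print(cfg.head_dim)  # 128
print(cfg.kv_cache_bytes(cfg.max_seq_len) / 2**30, "GiB")  # 2.0 GiB
```

Because only 8 key/value heads are cached instead of 48, the KV cache for a full 8,192-token context stays around 2 GiB under these assumptions.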

Because of its size, Grok-1 needs substantial hardware to run locally. 8-bit inference requires roughly an NVIDIA DGX H100 system (8 GPUs with 80 GB of VRAM each), while 4-bit inference needs around 320 GB.
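A common rule of thumb for the memory needed to hold the weights alone is parameters × bits per parameter / 8 bytes. The sketch below applies it to Grok-1’s 314 billion parameters. It deliberately ignores activations, the KV cache, and framework overhead, so real requirements are higher than these figures.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

GROK1_PARAMS = 314e9

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(GROK1_PARAMS, bits):.0f} GB")
```

This prints roughly 628 GB, 314 GB, and 157 GB. For comparison, a DGX H100 offers 8 × 80 GB = 640 GB of GPU memory in total.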

The code and weights in this release are licensed under Apache 2.0.

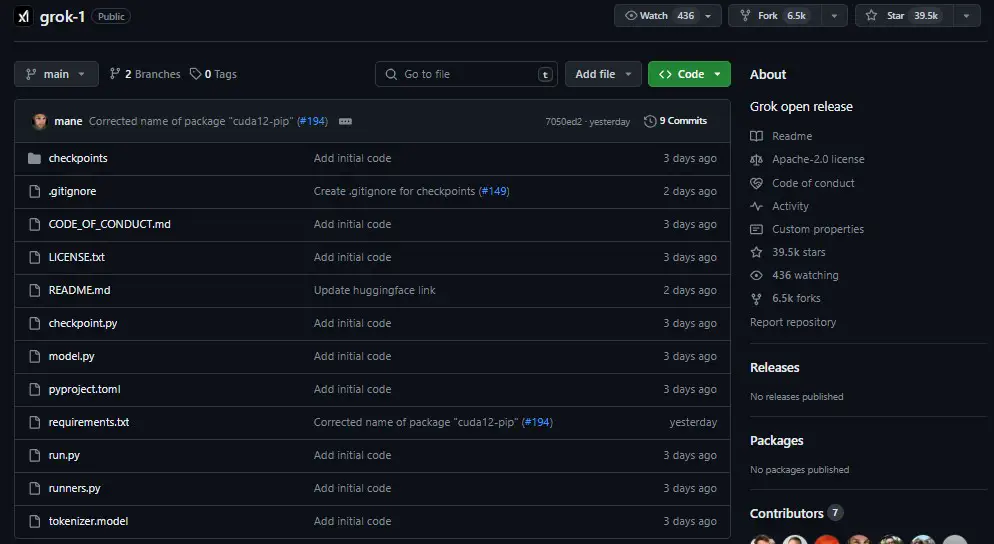

How to download Grok

Instructions for downloading and running Grok-1 are in the xai-org/grok-1 GitHub repository, which contains example JAX code to load and run the open-weight model. Clone the repository to your local machine to get started.

To Download this Model:

git clone https://github.com/xai-org/grok-1.git && cd grok-1

#git clone https://github.com/xai-org/grok-1.git

#cd grok-1pip install huggingface_hub[hf_transfer]

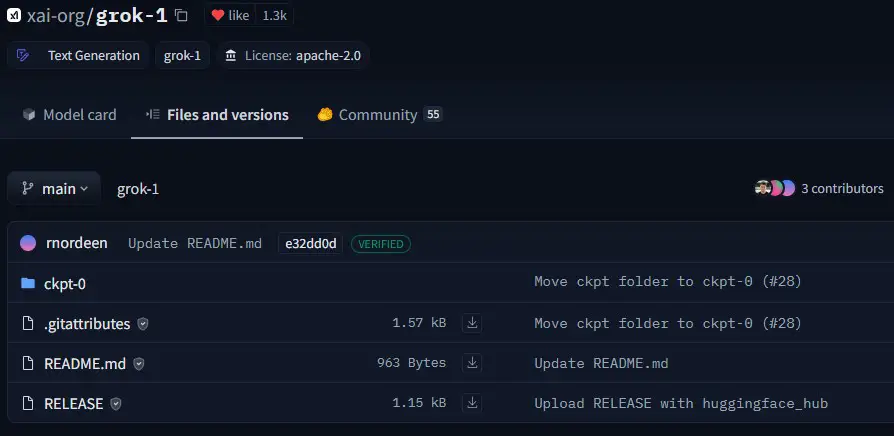

#pip install -U huggingface_hubhuggingface-cli download xai-org/grok-1 --repo-type model --include ckpt-0/* --local-dir checkpoints --local-dir-use-symlinks FalseUse can aslo directly download this model from Hugging Face 🤗 Hub xai-org/grok-1:

```shell
# Alternatively, clone the whole Hub repository with Git LFS
git lfs install
git clone https://huggingface.co/xai-org/grok-1
```

Make sure to place the ckpt-0 directory inside the checkpoints folder, matching the layout of the GitHub repository.

Note: When you clone the model directly from the Hub, make sure the other files, such as run.py, are also present; otherwise, download them manually from GitHub. As the image below shows, these files are missing from the Hub repository because the model is not currently available in the Hugging Face Transformers format.
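Before running the example, it can help to confirm that the directory contains everything run.py expects. The helper below is a hypothetical sketch: its list of required entries (run.py, requirements.txt, and checkpoints/ckpt-0) comes only from this article, not from an official manifest, so extend it if the repository needs more.

```python
from pathlib import Path
from typing import List

# Hypothetical list based on this article: the script, its requirements
# file, and the checkpoints/ckpt-0 weights directory.
REQUIRED_ENTRIES = ["run.py", "requirements.txt", "checkpoints/ckpt-0"]

def missing_entries(repo_dir: str) -> List[str]:
    """Return the required files or directories that are absent from repo_dir."""
    root = Path(repo_dir)
    return [rel for rel in REQUIRED_ENTRIES if not (root / rel).exists()]

if __name__ == "__main__":
    missing = missing_entries("grok-1")
    if missing:
        print("Missing from grok-1/:", ", ".join(missing))
    else:
        print("Layout looks complete.")
```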

You can also download all of the model weights with a torrent client using this magnet link:

magnet:?xt=urn:btih:5f96d43576e3d386c9ba65b883210a393b68210e&tr=https%3A%2F%2Facademictorrents.com%2Fannounce.php&tr=udp%3A%2F%2Ftracker.coppersurfer.tk%3A6969&tr=udp%3A%2F%2Ftracker.opentrackr.org%3A1337%2Fannounce

Run Model

Your model is now ready. To test the code, run the following commands:

```shell
pip install -r requirements.txt
python run.py
```

You can change the prompt in the run.py file:

```python
# A snippet from run.py: the prompt and the sampling call
inp = "The answer to life the universe and everything is of course"
print(f"Output for prompt: {inp}", sample_from_model(gen, inp, max_len=100, temperature=0.01))
```

Because of the model’s size (314B parameters), testing it with the example code requires a machine with ample GPU memory.
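The temperature argument in run.py controls how strongly sampling favors the most likely token: logits are divided by the temperature before the softmax, so small values sharpen the distribution. The sketch below is a generic illustration of temperature sampling over a list of logits, not the repository’s sample_from_model; both function names are hypothetical.

```python
import math
import random
from typing import List, Optional

def temperature_probs(logits: List[float], temperature: float) -> List[float]:
    """Softmax of logits / temperature, computed in a numerically stable way."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits: List[float], temperature: float,
                 rng: Optional[random.Random] = None) -> int:
    """Draw one token index from the temperature-scaled distribution."""
    rng = rng or random.Random()
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

logits = [2.0, 1.0, 0.5]
print(temperature_probs(logits, 1.0))   # probability spread over all tokens
print(temperature_probs(logits, 0.01))  # nearly all mass on index 0
```

At temperature=0.01, as in run.py, sampling behaves almost exactly like greedy decoding.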

Grok-1 PyTorch version for Transformers

A PyTorch version of the Grok-1 model and weights is now available on Hugging Face (hpcai-tech/grok-1). The original JAX modeling code was translated to PyTorch. The weights were converted by mapping the tensor files to parameter keys, de-quantizing the tensors with their corresponding packed scales, and saving the result to a checkpoint file with torch APIs.
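De-quantization turns low-precision integers back into floating-point weights by multiplying them by stored scale factors. The sketch below shows the idea for symmetric int8 quantization with one scale per column. The article does not describe the exact layout of the packed scales in the Grok-1 checkpoint, so the per-column scheme and both helper names are assumptions for illustration.

```python
from typing import List, Tuple

def quantize_int8(w: List[List[float]]) -> Tuple[List[List[int]], List[float]]:
    """Symmetric per-column int8 quantization: one float scale per column."""
    # Map each column's largest magnitude to 127 (use 1.0 for all-zero columns).
    scales = [max(abs(x) for x in col) / 127 or 1.0 for col in zip(*w)]
    q = [[max(-127, min(127, round(x / s))) for x, s in zip(row, scales)]
         for row in w]
    return q, scales

def dequantize_int8(q: List[List[int]], scales: List[float]) -> List[List[float]]:
    """Recover approximate float weights: w[r][c] ~= q[r][c] * scales[c]."""
    if any(len(row) != len(scales) for row in q):
        raise ValueError("each row needs exactly one value per scale")
    return [[v * s for v, s in zip(row, scales)] for row in q]

w = [[0.5, -1.0], [0.25, 0.1]]
q, scales = quantize_int8(w)
print(q)
print(dequantize_int8(q, scales))
```

The round trip is lossy: each recovered weight is within half a scale step of the original, which is the precision trade-off that 8-bit storage makes.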

Usage

```python
import torch
from transformers import AutoModelForCausalLM
from sentencepiece import SentencePieceProcessor

torch.set_default_dtype(torch.bfloat16)

model = AutoModelForCausalLM.from_pretrained(
    "hpcai-tech/grok-1",
    trust_remote_code=True,  # the model class is defined in the Hub repo's own code
    device_map="auto",       # spread layers across the available GPUs
    torch_dtype=torch.bfloat16,
)

# Grok-1's SentencePiece tokenizer file
sp = SentencePieceProcessor(model_file="tokenizer.model")

text = "Replace this with your text: What is Fine Tuning?"
input_ids = sp.encode(text)
input_ids = torch.tensor([input_ids]).cuda()
attention_mask = torch.ones_like(input_ids)

generate_kwargs = {}  # Add any additional generate() arguments here
inputs = {
    "input_ids": input_ids,
    "attention_mask": attention_mask,
    **generate_kwargs,
}
outputs = model.generate(**inputs)

# Decode the generated token IDs back into text
print(sp.decode(outputs[0].tolist()))
```
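The example passes an empty generate_kwargs dict, which can carry any of the standard Transformers generate() arguments. The values below are illustrative assumptions, not settings recommended for Grok-1; merge the dict into inputs exactly as the snippet above does.

```python
# Standard Hugging Face generate() arguments; values are illustrative only.
generate_kwargs = {
    "max_new_tokens": 64,  # cap on tokens generated beyond the prompt
    "do_sample": True,     # sample instead of greedy decoding
    "temperature": 0.7,    # values below 1 sharpen the distribution
    "top_p": 0.9,          # nucleus sampling: keep the smallest set with 90% mass
}
```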

Implementation of Grok-1 Tokenizer

A Hugging Face version of the Grok-1 tokenizer, adapted from xai-org/grok-1, is now available. This means the Grok-1 tokenizer can be used with Hugging Face libraries such as Transformers, Tokenizers, and Transformers.js.

Transformers/Tokenizers:

```python
from transformers import LlamaTokenizerFast

tokenizer = LlamaTokenizerFast.from_pretrained('Xenova/grok-1-tokenizer')
assert tokenizer.encode('hello world')  # a non-empty list of token IDs
```

Guidelines for downloading the model from Hugging Face

Download a single file (this is just an example 🤗):

```python
from huggingface_hub import hf_hub_download

hf_hub_download(repo_id="xai-org/grok-1", filename="config.json")
```

Or an entire repository:

```python
from huggingface_hub import snapshot_download

snapshot_download("xai-org/grok-1")
```

Files are downloaded to a local cache folder. More details are available in the Hugging Face Hub download guide.

Conclusion

Limited access hinders progress in AI models: While some fully open-source models exist (e.g., Mistral and Falcon), many leading models are either closed-source or have restricted licenses. Companies like Meta release “free” research models (Llama 2) but restrict commercial use and further development. This limits valuable feedback and innovation from the wider research community.

Paid exclusivity can backfire: The Grok chatbot initially required a paid subscription for access. This aimed to position Grok as a unique, edgy alternative. However, our testing found it underwhelming compared to freely available options like ChatGPT or Gemini. This suggests limited access can hinder a model’s ability to compete and improve.

Facing an issue? Ask in the comments or join the discussion on Hugging Face.