What is Google Gemini?

Google Gemini is a family of multimodal large language models (LLMs) developed by Google DeepMind, with capabilities in language, image, audio, code, and video understanding. It is Google’s strategic response to OpenAI's ChatGPT. It reportedly incorporates AlphaGo-inspired techniques and could draw on Google’s extensive proprietary data from its various services.

Background

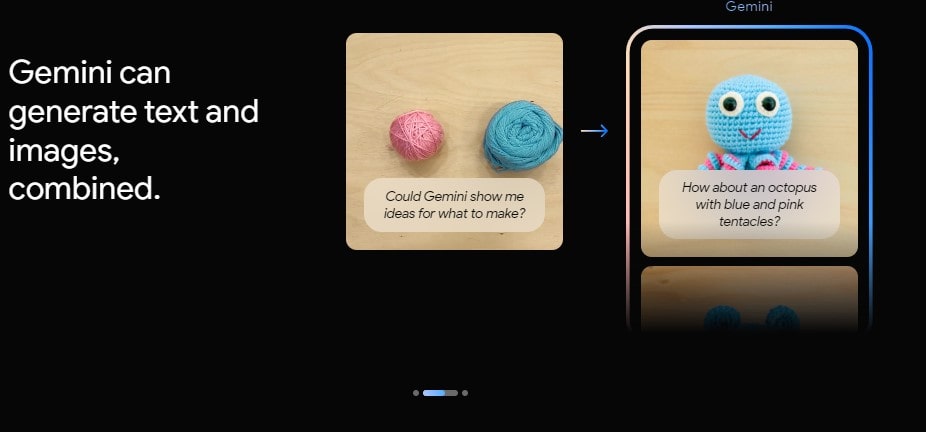

Gemini aims to challenge ChatGPT’s dominance in generative AI, underscoring Google’s commitment to AI innovation in a rapidly growing market. As a multimodal model, Gemini can tackle complex tasks in math, physics, and other areas, and it can understand and generate high-quality code in various programming languages. It is designed to understand not just text but also images, video, and audio, which makes it useful for a wide range of applications.

Gemini 1.0, announced on Dec. 6, 2023, is Alphabet’s newest AI model from Google DeepMind, with reported involvement from co-founder Sergey Brin. It succeeds Pathways Language Model 2 (PaLM 2) as Google’s flagship model and is being integrated into a range of Google products. Notably, Gemini replaced PaLM 2 as the model behind the Google Bard chatbot.

The launch of ChatGPT in November 2022 prompted Google to declare a "code red" and invest heavily in generative AI. That effort produced Google Bard in early 2023 and, on December 6, 2023, Google Gemini. Whether Gemini will let Google overtake ChatGPT remains to be seen.

Gemini is currently accessible through Google Bard and the Google Pixel 8 Pro, and Google plans to integrate it into other services in the coming months.

Google’s most advanced AI model explained

Gemini comes in several model sizes, each aimed at different use cases. The top-tier Ultra model, optimized for highly complex tasks, is slated for availability in early 2024. The Pro model, which balances performance and scalability, powers Google Bard and has been available to developers through Google AI Studio and Google Cloud Vertex AI since Dec. 13, 2023. A fine-tuned version of Gemini Pro also underpins AlphaCode 2, Google DeepMind’s code-generation system.

The Nano model targets on-device applications and comes in two versions: Nano-1, with 1.8 billion parameters, and Nano-2, with 3.25 billion parameters. Gemini Nano ships on the Google Pixel 8 Pro smartphone.
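To see why models of this size can fit on a phone, the weight storage can be estimated from the two Nano parameter counts above. The sketch below assumes the weights are stored at 4 bits per parameter (a precision often used for on-device deployment) and compares that with 16-bit storage; it counts weights only and ignores activations, caches, and runtime overhead, so real memory use will be higher. The helper name `weight_bytes` is illustrative.

```python
# Rough, weights-only memory estimate for the two Gemini Nano variants.
# Assumption: 4 bits per parameter for the on-device case; activations,
# caches, and runtime overhead are deliberately ignored.

NANO_PARAMS = {
    "Nano-1": 1.8e9,   # parameter counts as stated in the text
    "Nano-2": 3.25e9,
}

def weight_bytes(num_params: float, bits_per_param: int) -> float:
    """Bytes needed to store num_params weights at the given bit width."""
    return num_params * bits_per_param / 8

for name, params in NANO_PARAMS.items():
    gb_4bit = weight_bytes(params, 4) / 1e9
    gb_16bit = weight_bytes(params, 16) / 1e9
    print(f"{name}: {gb_4bit:.3f} GB at 4-bit vs {gb_16bit:.3f} GB at 16-bit")
```

Under these assumptions, Nano-1 needs about 0.9 GB and Nano-2 about 1.6 GB for weights, roughly a quarter of what 16-bit storage would require.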

With the generative AI market projected to reach $1.3 trillion by 2032, Google is investing heavily to maintain its position in AI development.

Capabilities

Gemini is designed to be multimodal. It uses natural language processing to interpret queries and data, and its image understanding lets it parse complex visuals without an external optical character recognition (OCR) tool. It performs well on multilingual tasks, including mathematical reasoning, summarization, and caption generation in many languages. Because it accepts handwritten notes, graphs, and diagrams, and can ingest text, images, audio, and video frames, it can work through complex problems that span these inputs.

Gemini is built for efficiency and supports tool and API integrations, which Google positions as a foundation for future capabilities such as memory and planning. The models come in several sizes, and Google says they were developed following responsible AI practices intended to mitigate bias and other potential harms.

Gemini’s distinguishing feature is native multimodality: it was trained end to end on diverse multimodal datasets, which enables it to reason across sequences of audio, images, and text. Google says these responsible development practices apply across all Gemini models, with the aim of keeping them ethical and reducing bias.

What can Gemini do?

The Google Gemini models handle a wide range of tasks across text, images, audio, and video. Their multimodal design lets them combine these data types in a single input and produce meaningful outputs.

Gemini’s capabilities encompass:

- Text Summarization: Gemini models can effectively summarize content from various data sources.

- Text Generation: Gemini can generate text based on user prompts, including Q&A-type chatbot interactions.

- Text Translation: Gemini’s multilingual capabilities extend to over 100 languages.

- Image Understanding: Gemini excels at interpreting complex visuals, such as charts, figures, and diagrams, without relying on external OCR tools. It can generate image captions and answer visual-based questions.

- Audio Processing: Gemini supports speech recognition in over 100 languages and can perform audio translation tasks.

- Video Understanding: Gemini can process frames from video clips to answer questions about them and generate descriptions.

- Multimodal Reasoning: A key strength of Gemini is its ability to reason across modalities, for example answering a question that depends on both a chart image and its accompanying text.

- Code Analysis and Generation: Gemini can analyze, explain, and generate code in popular programming languages, including Python, Java, C++, and Go.
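Several of these capabilities rely on sending mixed inputs in one request. As a minimal sketch, assuming the JSON layout of the Gemini REST API's `generateContent` request (a `contents` list whose `parts` can hold `text` or base64-encoded `inline_data` with a `mime_type`), a text-plus-image prompt could be packaged as follows. The field names should be checked against the current API reference, the helper name `build_multimodal_request` is hypothetical, and the image bytes are a placeholder.

```python
import base64
import json

def build_multimodal_request(prompt: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> str:
    """Return a JSON request body pairing a text part with an inline image part.

    Assumed layout: contents -> parts -> {text} / {inline_data: {mime_type, data}}.
    """
    body = {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Binary image data must be base64-encoded to travel inside JSON.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }
    return json.dumps(body)

# Placeholder bytes stand in for a real chart or diagram image.
payload = build_multimodal_request("Describe the trend in this chart.", b"\x89PNG placeholder")
print(payload[:80])
```

Putting the text and the image in the same `parts` list is what lets the model reason over both together, rather than handling the image in a separate call.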

Versions of Gemini

Google’s Gemini model is flexible and scalable, running everywhere from data centers to mobile devices. It comes in three sizes, each tailored to specific tasks:

1. Gemini Nano

Designed for on-device tasks on smartphones such as the Google Pixel 8 Pro, Gemini Nano provides efficient AI processing for features such as suggested replies in messaging apps and summaries in the Recorder app.

2. Gemini Pro

Running in Google’s data centers, Gemini Pro powers the latest version of Google’s AI chatbot, Bard. It is designed to deliver fast responses and handle complex queries.

3. Gemini Ultra

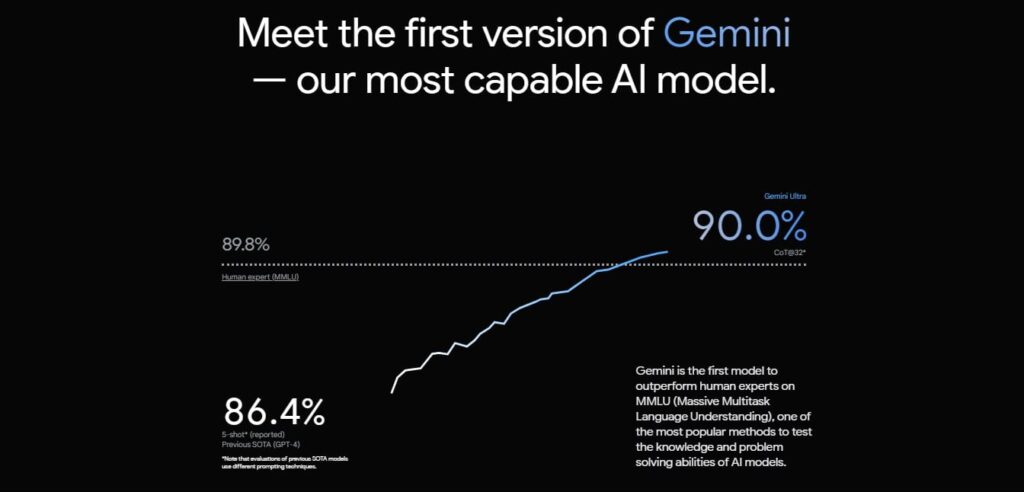

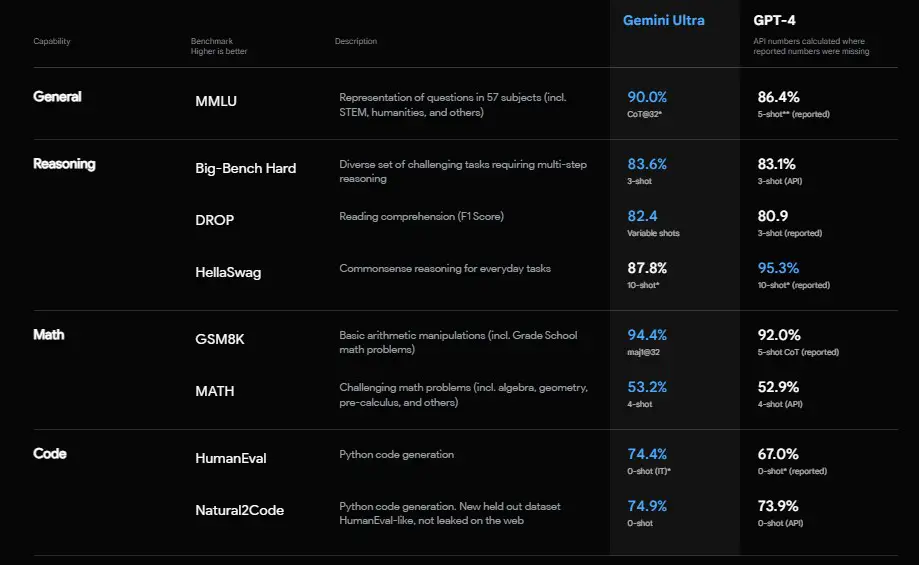

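Although not yet widely available, Gemini Ultra is the most capable model. Google reports that it exceeds the previous state of the art on 30 of 32 widely used academic benchmarks in large language model research, and that it is the first model to outperform human experts on MMLU (Massive Multitask Language Understanding), with a score of 90.0%. Geared toward highly complex tasks, it is undergoing further testing before a broad release.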

How does Google Gemini work?

Google Gemini is trained on a large corpus of data. After training, it uses neural network techniques to understand content, answer questions, and generate text and other outputs.

Specifically, Gemini LLMs use a neural network architecture based on the Transformer. This architecture is optimized to process long contextual sequences across diverse data types, including text, audio, and video. Google DeepMind built Gemini on Transformer decoders with efficient attention mechanisms, which help the models handle long contexts (32,000 tokens for Gemini 1.0) that span multiple modalities.
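Google DeepMind’s Gemini 1.0 technical report cites multi-query attention as one example of the efficient attention used in its Transformer decoders. In multi-query attention, every query head shares a single key/value head, which shrinks the key/value state that must be cached during generation and so makes long contexts cheaper. The NumPy sketch below is a generic, textbook version of causal multi-query attention, not Gemini’s actual implementation; the function name, weight shapes, and dimensions are illustrative assumptions.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_query_attention(x, w_q, w_k, w_v, num_heads):
    """Causal multi-query attention over x with shape (seq_len, d_model).

    w_q:      (d_model, num_heads * head_dim), one query projection per head
    w_k, w_v: (d_model, head_dim), a single key/value head shared by all query heads
    """
    seq_len = x.shape[0]
    head_dim = w_k.shape[1]
    q = (x @ w_q).reshape(seq_len, num_heads, head_dim).transpose(1, 0, 2)  # (H, T, D)
    k = x @ w_k                                # (T, D), shared across heads
    v = x @ w_v                                # (T, D), shared across heads
    scores = q @ k.T / np.sqrt(head_dim)       # (H, T, T)
    # Causal mask: position t may attend only to positions <= t, as in a decoder.
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    out = softmax(scores) @ v                  # (H, T, D)
    return out.transpose(1, 0, 2).reshape(seq_len, num_heads * head_dim)

# Usage with small random weights.
rng = np.random.default_rng(0)
d_model, num_heads, head_dim, seq_len = 16, 4, 8, 5
x = rng.standard_normal((seq_len, d_model))
w_q = rng.standard_normal((d_model, num_heads * head_dim))
w_k = rng.standard_normal((d_model, head_dim))
w_v = rng.standard_normal((d_model, head_dim))
y = multi_query_attention(x, w_q, w_k, w_v, num_heads)
print(y.shape)  # (5, 32)
```

Compared with standard multi-head attention, only `w_k` and `w_v` change: there is one `(d_model, head_dim)` projection each instead of one per head, so the per-token key/value state is `num_heads` times smaller.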

Gemini models are trained on diverse multimodal and multilingual datasets containing text, images, audio, and video, and Google DeepMind applied data-quality filtering to improve training. When a Gemini model is deployed to support a specific Google service, it can go through targeted fine-tuning to further optimize it for that use case.

For both training and inference, Gemini relies on Google’s custom Tensor Processing Units (TPUs); the Gemini 1.0 models were trained on TPU v4 and TPU v5e accelerators, which are designed to train and serve large models efficiently.

A key challenge for LLMs is the potential for bias and toxicity. According to Google, Gemini underwent the most comprehensive safety evaluations of any Google AI model to date, including testing and mitigations for risks such as bias and toxicity.

To validate Gemini’s capabilities, Google evaluated the models on academic benchmarks spanning the language, image, audio, video, and code domains.

How can you access Gemini?

Gemini is now available in its Nano and Pro sizes in Google products: Nano on the Pixel 8 Pro phone and Pro in the Bard chatbot. Google plans to integrate Gemini into Search, Ads, Chrome, and other services over time.

Starting December 13, 2023, developers and enterprise customers can access Gemini Pro through the Gemini API in Google AI Studio and Google Cloud Vertex AI. Android developers will be able to access Gemini Nano through AICore, initially as an early preview.
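As a minimal sketch of what a Gemini Pro call looks like over plain HTTP, the function below builds, but does not send, a text-only request using only the Python standard library. The endpoint root, the `gemini-pro` model name, the `?key=` authentication style, and the JSON layout reflect the public Gemini API (AI Studio) as launched in December 2023 and are assumptions to verify against current documentation; Vertex AI uses a different endpoint and authentication. The helper name `build_generate_request` is hypothetical.

```python
import json
import urllib.request

# Assumed AI Studio (Gemini API) REST root; check the current API reference.
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"

def build_generate_request(prompt: str, api_key: str,
                           model: str = "gemini-pro") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text-only prompt."""
    url = f"{API_ROOT}/models/{model}:generateContent?key={api_key}"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_generate_request("Summarize the Gemini 1.0 launch in one sentence.",
                             api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)

# With a real key, sending the request would look like this:
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)
#     print(reply["candidates"][0]["content"]["parts"][0]["text"])
```

Building the request separately from sending it keeps the example runnable without credentials; Google also publishes client SDKs that wrap this same HTTP call.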

Google’s Gemini AI is a Serious Threat to ChatGPT

Will Gemini Take the Crown from ChatGPT?

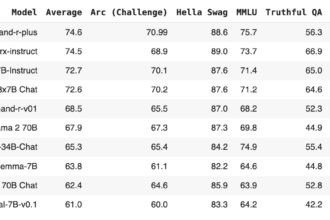

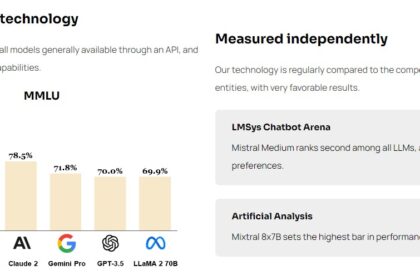

Google’s Gemini is one of the largest and most advanced AI models, and its release, particularly of the Ultra model, has been eagerly anticipated. What sets it apart from the models behind other popular AI chatbots is native multimodality: whereas models like GPT-4 were built primarily around text, Gemini handles multimodal tasks without relying on plugins or integrations.

The competition between Gemini and ChatGPT has been a hot topic, with ChatGPT reaching over 100 million monthly active users in early 2023. Initially, Gemini’s ability to work with both text and images was seen as a differentiator, but OpenAI’s announcement on September 25, 2023, that ChatGPT would support voice and image queries narrowed that gap.

With OpenAI now experimenting with a multimodal approach and connecting ChatGPT to the internet, the question of Gemini’s potential advantage becomes more pressing. Google’s vast array of proprietary data could be a significant advantage: Gemini could potentially draw on data from services such as Google Search, YouTube, Google Books, and Google Scholar, which may lead to more sophisticated insights and inferences. Reports suggest that Gemini was trained on twice as many tokens as GPT-4.

Additionally, the April 2023 merger of DeepMind and the Google Brain team into Google DeepMind gives OpenAI a formidable rival: a team of renowned AI researchers such as Paul Barham, backed by Google co-founder Sergey Brin. This team has expertise in techniques like reinforcement learning and tree search, which were instrumental in AlphaGo’s 2016 victory over Go world champion Lee Sedol.

Gemini’s multimodality, which lets it reason across various media formats, is another key aspect. Multimodality itself is not unique, but most multimodal models are not publicly available, are difficult to use, or are task-specific. This has left GPT-4 as the dominant player in the space, and many experts have been waiting for a strong alternative to GPT-4 and its vision capabilities, since no other model currently sits in the same class.

Moreover, Gemini already powers both Bard and the Pixel 8 Pro, showing how deeply it is being integrated into Google’s ecosystem. Access to proprietary training data, especially from services like YouTube, further strengthens Gemini’s potential edge.