In the past few years, Google has introduced various user-friendly tools to support data scientists and machine learning engineers. These tools, such as Google Colab, TensorFlow, BigQueryML, Cloud AutoML, and Cloud AI, have been instrumental in making AI more accessible to organizations.

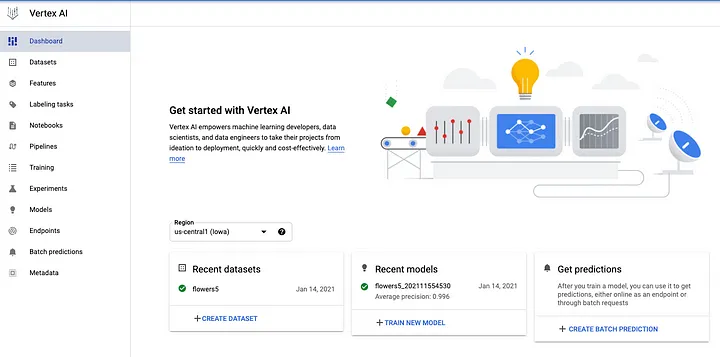

However, managing multiple tools for tasks like data analysis, model training, and deployment can be tedious. To streamline this process, Google introduced “Vertex AI,” which consolidates its cloud ML offerings into a unified platform and makes much of the ML process accessible with little or no coding.

This opens up ML development to a broader range of users beyond machine learning engineers. Additionally, Vertex AI’s improved workflow and user-friendly interface accelerate the work of data scientists. Let’s explore Vertex AI’s features and use cases!

What is Vertex AI?

Vertex AI is a machine learning (ML) platform developed by Google Cloud that serves as a unified solution for building, training, and deploying ML models and AI applications. It facilitates the customization of large language models (LLMs) like Gemini while streamlining data engineering, data science, and ML engineering workflows. By providing a common toolset, Vertex AI encourages collaboration among teams and lets applications scale on Google Cloud.

Under the Vertex AI umbrella, Google offers all its AI cloud services in an end-to-end workflow, encompassing tasks such as data preprocessing, analysis, AutoML, and other advanced ML components.

Developers, data scientists, and researchers can leverage pre-trained models or customize large language models (LLMs) for use in their AI-powered applications. Users can also train and deploy models on Google Cloud infrastructure, including AI Platform, Kubernetes, and AutoML. The platform is designed to be approachable for beginners and time-saving for experts: according to Google, it requires nearly 80% fewer lines of code to train a model than competing platforms.

Vertex AI options for model training and deployment

Vertex AI unifies the ML workflow with a single UI and API, integrating with open-source frameworks like PyTorch and TensorFlow. Pre-trained APIs simplify integration for video, vision, language, and more.

- AutoML enables easy coding-free training for tabular, image, text, or video data.

- Custom training provides complete control, allowing the use of preferred ML frameworks, custom training code, and hyperparameter tuning.

- Model Garden extends capabilities for discovering, testing, customizing, and deploying Vertex AI and open-source models.

- Generative AI gives access to Google’s large generative AI models for text, code, images, and speech, adaptable to specific needs.

The platform ensures end-to-end data and AI integration, interfacing with Dataproc, Dataflow, and BigQuery through Vertex AI Workbench. Users can build/run ML models in BigQuery or export data to Vertex AI Workbench for execution.
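As a rough illustration of the AutoML path with BigQuery as the data source, here is a minimal sketch using the Vertex AI SDK for Python (`google-cloud-aiplatform`). The project, table, display names, and the `churned` target column are hypothetical placeholders, and the helper `automl_run_args` is an illustrative function of ours, not part of the SDK. Running the training function requires a Google Cloud project with credentials and incurs cost.

```python
from typing import Any, Dict


def automl_run_args(target_column: str, budget_node_hours: float = 1.0) -> Dict[str, Any]:
    """Build keyword arguments for AutoMLTabularTrainingJob.run() (illustrative helper)."""
    if budget_node_hours <= 0:
        raise ValueError("budget_node_hours must be positive")
    return {
        "target_column": target_column,
        # The SDK expresses the training budget in milli node hours (1000 = 1 node hour).
        "budget_milli_node_hours": int(budget_node_hours * 1000),
        "model_display_name": f"{target_column}-automl-model",
    }


def train_automl_from_bigquery(project: str, location: str, bq_table: str, target_column: str):
    """Create a tabular dataset from a BigQuery table and train an AutoML model on it."""
    # Imported lazily so the module loads even where the SDK is not installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)

    # bq_table is assumed to look like "my-project.my_dataset.my_table".
    dataset = aiplatform.TabularDataset.create(
        display_name="example-dataset",  # hypothetical name
        bq_source=f"bq://{bq_table}",
    )
    job = aiplatform.AutoMLTabularTrainingJob(
        display_name="example-automl-job",  # hypothetical name
        optimization_prediction_type="classification",
    )
    # Blocks until training finishes and returns the trained Model resource.
    return job.run(dataset=dataset, **automl_run_args(target_column))


# Only the argument builder runs here; training needs a real project.
print(automl_run_args("churned", budget_node_hours=2))
```

Custom training follows a similar shape but takes your own training script or container in place of the AutoML job.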

Vertex AI and the machine learning (ML) workflow

Vertex AI simplifies the entire data science workflow right from the start. Organized datasets support the initial steps of data preparation. Data can be labeled and interpreted right on the platform. No need to switch between different services.

At the training stage, AutoML is available for image, video, text, and tabular data. If your data is in one of these formats, there is no need to write a custom model; Vertex AI will select an appropriate model for prediction.

Plus, developers don’t need to compromise on insight. Vertex ML Metadata allows them to record the parameters and observations of an experiment. Feature attributions let users examine Vertex AI’s predictions in more detail: they show which features contributed most to a result, which forms the basis for further feature engineering.
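To make the idea of attributing a prediction to individual features concrete, the sketch below computes exact Shapley values for a toy model in plain Python. Vertex AI offers sampled Shapley among its attribution methods; this is only an illustration of the underlying idea on a hypothetical two-feature model, not the service's implementation. The feature names and the model itself are made up.

```python
from itertools import permutations
from typing import Callable, Dict

Features = Dict[str, float]


def shapley_values(predict: Callable[[Features], float],
                   instance: Features,
                   baseline: Features) -> Dict[str, float]:
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)       # start from the baseline input
        previous = predict(current)
        for name in order:
            current[name] = instance[name]   # switch one feature to its real value
            value = predict(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_model(x: Features) -> float:
    """Hypothetical model with an interaction term between the two features."""
    return 2.0 * x["tenure"] + 3.0 * x["usage"] + x["tenure"] * x["usage"]


instance = {"tenure": 1.0, "usage": 2.0}
baseline = {"tenure": 0.0, "usage": 0.0}
attributions = shapley_values(toy_model, instance, baseline)
print(attributions)  # tenure: 3.0, usage: 7.0
```

The attributions sum to the difference between the prediction for the instance (10.0) and the prediction for the baseline (0.0), which is the property that makes them easy to interpret. Exact computation grows factorially with the number of features, which is why sampling-based approximations are used in practice.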

For other applications, or if developers want more control, they can train custom models with their preferred frameworks and model architectures instead of relying on AutoML or pre-trained models. To make this easier, Vertex AI provides prebuilt Docker container images as part of its training service.

Vertex Explainable AI lets you understand the reasoning behind your model’s predictions at the evaluation stage. Vertex AI also provides the managed software and hardware needed for deployment. After deployment, users have several options for accessing the details behind model predictions.

Once developers have chosen a task they want to build a machine learning-based app for, they need to ingest, analyze, and transform the raw data. The next step is to build and train a specific model to perform the task in question. Training models can often be the most time-consuming part of the process.

After that follows the evaluation stage. Here, the model may be proven to be reliable, but it could also show problems. If developers are not happy with their original model, they may choose to use another one and repeat these two stages.
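The train, evaluate, and repeat cycle can be sketched in a few lines of plain Python. Everything here is a toy assumption: the data is synthetic, the "models" are simple threshold rules, and the two candidate grids stand in for two different model choices. The point is only the control flow: train on one split, score on a held-out validation split, and move to the next candidate if the score misses the target.

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple

Example = Tuple[float, int]  # (feature value, binary label)


def split(rows: Sequence[Example], seed: int = 0):
    """Shuffle, then split into 60% train, 20% validation, 20% test."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 6 // 10
    n_val = len(shuffled) * 2 // 10
    return shuffled[:n_train], shuffled[n_train:n_train + n_val], shuffled[n_train + n_val:]


def accuracy(threshold: float, rows: Sequence[Example]) -> float:
    """Share of rows where the rule 'x >= threshold' matches the label."""
    return sum((x >= threshold) == bool(y) for x, y in rows) / len(rows)


def train(rows: Sequence[Example], grid: List[float]) -> float:
    """'Train' a one-parameter model: pick the grid threshold with the best accuracy."""
    return max(grid, key=lambda t: accuracy(t, rows))


def iterate(train_rows, val_rows, grids: Dict[str, List[float]], target: float):
    """Try candidates in order; stop at the first one that meets the target."""
    best: Optional[Tuple[str, float, float]] = None
    for name, grid in grids.items():
        threshold = train(train_rows, grid)
        score = accuracy(threshold, val_rows)   # evaluate on held-out data
        if best is None or score > best[2]:
            best = (name, threshold, score)
        if score >= target:
            break
    return best


data = [(float(x), int(x >= 42)) for x in range(100)]  # synthetic labels
train_rows, val_rows, test_rows = split(data)
grids = {"coarse": [25.0, 75.0], "fine": [float(t) for t in range(101)]}
best_name, best_threshold, best_score = iterate(train_rows, val_rows, grids, target=0.95)
print(best_name, best_threshold, best_score, accuracy(best_threshold, test_rows))
```

The test split is touched only once, after a candidate has been selected, so the final number is not biased by the selection process.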

Once the team finds a reliable model, they can deploy their app and run predictive tasks.
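For the deployment step, a minimal sketch with the Vertex AI SDK for Python might look like the following. The model ID, machine type, and replica counts are placeholder choices, and the instance format (one dict per row) is an assumption that fits tabular models; other model types expect different payloads. `to_instances` is an illustrative helper of ours, not an SDK function. A deployed endpoint is billed while it is running.

```python
from typing import Any, Dict, List, Sequence


def to_instances(feature_names: Sequence[str], rows: Sequence[Sequence[Any]]) -> List[Dict[str, Any]]:
    """Turn raw rows into one dict per row, keyed by feature name (illustrative helper)."""
    return [dict(zip(feature_names, row)) for row in rows]


def deploy_and_predict(project: str, location: str, model_id: str,
                       instances: List[Dict[str, Any]]):
    """Deploy a registered model to an endpoint and request online predictions."""
    # Imported lazily so the module loads even where the SDK is not installed.
    from google.cloud import aiplatform

    aiplatform.init(project=project, location=location)
    model = aiplatform.Model(model_name=model_id)  # ID or full resource name

    # Creates an endpoint and deploys the model to it; this can take several minutes.
    endpoint = model.deploy(
        machine_type="n1-standard-4",  # placeholder machine type
        min_replica_count=1,
        max_replica_count=2,
    )
    response = endpoint.predict(instances=instances)
    return response.predictions


# Only the payload builder runs here; deployment needs a real project.
print(to_instances(["tenure", "usage"], [(12, 3.5), (48, 0.2)]))
```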

Vertex AI’s end-to-end MLOps tools automate and scale projects throughout the ML lifecycle after deploying models. These MLOps tools run on fully-managed infrastructure that can be customized based on performance and budget needs.

The Vertex AI SDK for Python enables running the entire machine learning workflow in Vertex AI Workbench, a Jupyter notebook-based development environment. Collaboration with a team is facilitated through Colab Enterprise, a version of Colaboratory integrated with Vertex AI. Other available interfaces include the Google Cloud Console, the gcloud command line tool, client libraries, and Terraform (limited support).

The steps below outline a typical machine learning workflow and how you can use Vertex AI to build and deploy your models at each stage.

- Data preparation involves extracting and cleaning the dataset, performing exploratory data analysis (EDA), applying data transformations and feature engineering, and splitting the data into training, validation, and test sets.

- Explore and visualize data using Vertex AI Workbench notebooks, which integrate with Cloud Storage and BigQuery to access and process data faster.

- For large datasets, use Dataproc Serverless Spark from a Vertex AI Workbench notebook to run Spark workloads without managing your own Dataproc clusters.

- Model training includes choosing a training method, using AutoML for code-free model training or custom training for writing your own training code and using preferred ML frameworks. Hyperparameter optimization is done using Vertex AI Vizier and Experiments, and trained models are registered in the Vertex AI Model Registry for versioning and deployment.

- Model evaluation and iteration involve evaluating trained models, making adjustments based on evaluation metrics, and iterating on the model.

- Model serving entails deploying models to production for real-time online predictions or asynchronous batch predictions. Optimized TensorFlow runtime and Vertex AI Feature Store are used for serving and monitoring feature health.

- Model monitoring is performed using Vertex AI Model Monitoring, which alerts on training-serving skew and prediction drift. It helps retrain models for improved performance using incoming prediction data.
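Training-serving skew and prediction drift both come down to comparing two distributions of the same feature. As we understand the Model Monitoring documentation, it describes L-infinity distance for categorical features and Jensen-Shannon divergence for numerical features; the plain-Python sketch below implements both measures on hypothetical data to show what such a check computes. It is an illustration, not the service's code, and the alert threshold is an arbitrary example value.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Sequence

Distribution = Dict[Hashable, float]


def distribution(values: Sequence[Hashable]) -> Distribution:
    """Relative frequency of each distinct value."""
    counts = Counter(values)
    total = len(values)
    return {key: count / total for key, count in counts.items()}


def bucketize(values: Sequence[float], width: float) -> list:
    """Map numerical values to fixed-width bucket indices so they can be compared."""
    return [int(v // width) for v in values]


def l_infinity_distance(p: Distribution, q: Distribution) -> float:
    """Largest absolute difference in probability over all categories."""
    keys = set(p) | set(q)
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def js_divergence(p: Distribution, q: Distribution) -> float:
    """Jensen-Shannon divergence in bits (0 = identical, 1 = disjoint)."""
    keys = set(p) | set(q)
    mix = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl(a: Distribution) -> float:
        return sum(v * math.log2(v / mix[k]) for k, v in a.items() if v > 0)

    return 0.5 * kl(p) + 0.5 * kl(q)


training_plan = ["basic", "basic", "pro", "pro"]   # hypothetical training data
serving_plan = ["basic", "pro", "pro", "pro"]      # hypothetical serving traffic
skew = l_infinity_distance(distribution(training_plan), distribution(serving_plan))

ALERT_THRESHOLD = 0.3  # example value only
print(f"skew={skew:.2f} alert={skew > ALERT_THRESHOLD}")
```

For skew, the reference distribution is the training data; for drift, it is an earlier window of serving traffic.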

Google Vertex AI: Conceptual Architecture

The conceptual architecture of Google Vertex AI is based on the robust serverless platforms, storage, GPUs, TPUs, and databases available on Google Cloud. This infrastructure serves as the cornerstone for Vertex AI’s end-to-end machine learning workflow.

Data scientists can expedite model development by utilizing the pre-built models and tools available in Vertex AI’s Model Garden. These models can be trained and tuned efficiently on massive datasets using the GPUs and TPUs available on Google Cloud. Model Garden provides models for tasks related to tabular data, NLP, and computer vision.

Developers can link trained models to real-time data from enterprise apps and APIs with the help of Vertex AI Extensions. This makes use cases such as search engines, conversational assistants, and automated workflows possible.

To enhance a model’s performance over time, extensions link it to APIs for feature extraction and data intake.

For data preparation and analytics, Vertex AI also offers connectors to integrate with BigQuery, Cloud Storage, Dataflow, and BigQuery ML services offered by Google Data Cloud. Through these connectors, datasets can be accessed and managed consistently throughout the Vertex AI workflow.

Vertex AI’s other features, such as Prompt, Grounding, Search, and Conversation, enable developers to create generative AI applications. While Grounding offers options for citations and evidence, Prompt offers predefined prompts and responses. Within apps, Search and Conversation functionalities offer a Google Search-like experience.
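As a small illustration of prompting a Gemini model from Python, the sketch below builds a prompt that includes numbered source passages and asks the model to cite them. Note the assumptions: the model name is a placeholder that may need updating, the passages are hypothetical, and placing sources in the prompt by hand is a simplified stand-in for the idea of grounding, not the managed Grounding feature itself. `build_grounded_prompt` is our own illustrative helper.

```python
from typing import Sequence


def build_grounded_prompt(question: str, passages: Sequence[str]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources."""
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number, and say so if the answer is not in them.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


def ask_gemini(project: str, location: str, prompt: str,
               model_name: str = "gemini-1.5-flash") -> str:
    """Send a prompt to a Gemini model on Vertex AI and return the text response."""
    # Imported lazily so the module loads even where the SDK is not installed.
    import vertexai
    from vertexai.generative_models import GenerativeModel

    vertexai.init(project=project, location=location)
    model = GenerativeModel(model_name)  # model name is an assumption; check availability
    return model.generate_content(prompt).text


# Only the prompt builder runs here; calling the model needs a real project.
prompt = build_grounded_prompt(
    "What does Vertex AI unify?",
    ["Vertex AI unifies the ML workflow with a single UI and API."],
)
print(prompt)
```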

The overall goal of Google Vertex AI’s architecture is to provide an end-to-end AI platform that covers the whole ML lifecycle, from data to deployment and management, through features like AutoML, Model Garden, AI Solutions, and integration with Google Data Cloud. The capacity of Vertex AI to offer a straightforward yet scalable solution for enterprise AI needs is fueled by the strength of Google Cloud’s infrastructure.

Decoding Google’s AI Giants: Cloud AI vs. Vertex AI

| Feature | Google Cloud AI Platform | Google Vertex AI |
|---|---|---|
| Model Development | Supports custom model development using popular ML frameworks like TensorFlow and PyTorch. | Continues to support custom model development, with a unified and integrated environment. |
| AutoML Capabilities | Offers AutoML features for model creation (AutoML Vision, NLP, Tables). | Provides a comprehensive Vertex AutoML toolset with automated processes for various ML tasks. |
| Training Infrastructure | Distributed training on GPUs and TPUs for large-scale ML workloads. | Builds upon AI Platform, offering distributed training and pre-built containerized training jobs. |
| Notebooks | AI Platform Notebooks for collaborative coding. | JupyterLab-based Vertex AI Workbench notebooks for streamlined collaboration. |
| Model Serving | Supports model deployment for real-time predictions. | Provides serverless model serving infrastructure for automatic scaling. |
| Monitoring | Offers model versioning, monitoring, and evaluation tools. | Extends monitoring with built-in metrics, dashboards, and Cloud Monitoring integration. |
| Integration | Integrates with various Google Cloud services. | Builds upon AI Platform integration, also connecting with AI Hub for ML components. |
| Scalability | Provides scalability with distributed training on powerful hardware. | Offers automatic scaling for deployed models with serverless infrastructure. |
| Ease of Use | User-friendly interface and workflow. | User-friendly interface with a unified and streamlined development workflow. |
| Unified Platform | Requires separate services for specific tasks. | Offers a unified platform for end-to-end ML development and deployment. |